When we first discussed Whole Database synchronization I mentioned that the timing of when updates were applied had to be taken into consideration. In that pattern, we wanted to avoid creating a scenario where the user was interacting with a record from the database (like viewing a campground listing on their phone), and while doing that, have that record go missing because an updated database was applied that lacked that record. In that pattern deletes were handled “for free”, provided we were careful in the aforementioned regard.

In the last chapter, we had our first look at a differential sync algorithm which promised to fix many of the shortcomings of whole database synchronization. We concluded that chapter without bringing up one pesky detail – it doesn’t handle deletions at all.

Deletes are Difficult

To better understand how deletes break our differential sync algorithm, let’s consider a simple example. In our example we have Alice and Ann. Alice is a user of our mobile application, and Ann is an administrator of our database. As an administrator, Ann is responsible for content updates to our system. Now, consider the following series of events:

1. Alice downloads our application and performs the initial sync

2. Sometime later, Ann creates a new campground named AAA Campground

3. Alice syncs her app, and it retrieves the new listing for AAA Campground

4. Ann, realizing that a listing for AAA Campground already existed in the system, deletes the more recently created record

5. Alice syncs her app

In this scenario, the desired behavior would be that the more recent AAA Campground listing would be removed from Alice’s mobile device. Unfortunately, this is where desire and reality diverge – Alice’s mobile device will not know to delete the listing, and therefore Alice will continue to see both AAA Campground listings.

What if, after step 5 above, Fred (also a user of the mobile application) downloads the application, and performs the initial sync? If that were to happen, then Fred and Alice would see different sets of data, despite both of their applications saying that they were up-to-date. Only Fred would actually see the correct set of data, while Alice would perpetually have a duplicate listing.

The reason all of this occurs is that the algorithm we’ve discussed so far simply does not handle deletions. On the mobile side of the sync, the application only performs inserts and updates into its local database. On the server-side, the API only knows to provide data based on the contents of its database, and by deleting information from that database, the API lacks the information it needs to inform the client application that a change occurred. These are certainly not ideal dilemmas – fortunately, there are solutions to these conundrums.
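To make the failure concrete, here is a minimal sketch that replays the scenario above against in-memory maps. The names `naiveSync`, `server`, `device`, and `freshDevice`, and the integer timestamps, are assumptions made for illustration; they stand in for the real API and databases rather than reproduce them.

```javascript
// Hypothetical in-memory stand-ins for the server database and Alice's device.
const server = new Map();
const device = new Map();

// Naive differential sync: copy every server row changed since lastSync.
// It only ever inserts or updates; it has no way to express a delete.
function naiveSync(serverRows, deviceRows, lastSync) {
  for (const row of serverRows.values()) {
    if (row.updatedAt > lastSync) {
      deviceRows.set(row.id, { ...row });
    }
  }
}

server.set(1, { id: 1, name: 'AAA Campground', updatedAt: 10 });
naiveSync(server, device, 0); // Alice's initial sync

server.set(2, { id: 2, name: 'AAA Campground', updatedAt: 20 }); // Ann's duplicate
naiveSync(server, device, 10); // Alice receives the duplicate

server.delete(2); // Ann hard deletes the duplicate
naiveSync(server, device, 20); // nothing changed since 20, so nothing is sent

const freshDevice = new Map();
naiveSync(server, freshDevice, 0); // Fred's initial sync

console.log(device.size, freshDevice.size);
```

Alice's device ends up holding two listings while Fred's holds one, even though both have just synced.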

Solutions

Now that you have a better understanding of why deletions are troublesome for the algorithm, let’s discuss what solutions exist, and the various pros and cons that each provides.

Avoid Deletions

The easiest problem to fix is the problem that was avoided. The same axiom holds here – if we can negate the need to perform deletions entirely, then we have avoided a huge potential pitfall. The options for avoiding deletes are very use-case specific, and may not be valid for all use-cases.

Track Deletions

If deletions cannot be avoided, one solution is to allow the records to be hard deleted but to implement some form of tracking mechanism. With this mechanism, the application keeps a list of all the record identifiers that have been deleted, and supplies that list to client devices so that they can delete the same records.

Logical Deletions

An alternative to hard deletes is logical deletes. For those unaccustomed to this concept, it is simply the idea of adding a deleted flag to each record. Records with the flag set to true should be ignored and treated as if they were not in the dataset.

Each of the above options carries its own unique set of benefits and risks, and no one solution is universally best. For this reason, it is not uncommon for an application of sufficient size to implement a mixture of all three. Let’s discuss each of them in more detail and see how they might be implemented.

Avoiding Deletions

This is perhaps the hardest of the three solutions to visualize because it solves the problem by avoiding the problem, and can thus take many forms. The possibilities here are numerous and could take the form of:

- Analysis to determine whether deletes are necessary at all

- Effective dates to avoid the necessity of deletes

- Chained recordsets

Of the above suggestions, effective dates and record chains are the most tangible – because, after all, if you could simply review your requirements and determine that deletes were not necessary, why would we be having this conversation?

Effective dating a record is the process by which records in a dataset have an explicit beginning or ending date (and in some cases both). It is a powerful technique by itself and is often used by applications without any data synchronization needs.

For an example of this, think about insurance documents, specifically automobile insurance. When you purchase auto insurance, your coverage will begin on a specific date and end on a specific date. These are the effective begin and effective end dates.

Now, think about when your auto insurance policy is about to expire. You still have coverage, so you have not yet reached the effective end date, but your insurance company has already issued you your next policy in advance (so that you can, if necessary, print a copy of the proof of insurance card). The insurance company’s system now holds two records for your policy: one which is currently effective, and one which is not yet effective.

As the above table illustrates, the same policy id has multiple entries, varying only by their effective dates. As each policy year comes, assuming you maintain insurance coverage, a new record will be inserted into the above table – records are never deleted, and that is the crucial element we are looking for.

Such a pattern is not without its downsides, and may not be suitable for every application. In particular, database queries against such a structure must take the effective dates into account and must not assume that a given record is effective yet (or still effective). By following this pattern, however, an application never needs to hard delete a record. It therefore works within the sync pattern discussed thus far, and also reaps the benefit of being able to track changes over time.
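The query-side consideration can be sketched as a simple filter. The rows, the field names `effectiveBegin` and `effectiveEnd`, and the function `effectiveOn` below are hypothetical and chosen only for illustration; the sketch also assumes dates are stored as ISO strings and that both ends of the range are inclusive.

```javascript
// Hypothetical policy rows; ISO date strings compare correctly as plain strings.
const policies = [
  { policyId: 'P-100', effectiveBegin: '2023-01-01', effectiveEnd: '2023-12-31' },
  { policyId: 'P-100', effectiveBegin: '2024-01-01', effectiveEnd: '2024-12-31' },
];

// Return only the rows in effect on the given date (inclusive on both ends).
function effectiveOn(rows, date) {
  return rows.filter(
    (row) => row.effectiveBegin <= date && date <= row.effectiveEnd
  );
}

// In mid-December both rows exist, but only the first is in effect.
const current = effectiveOn(policies, '2023-12-15');
console.log(current.length);
```

Nothing is ever removed from `policies`; a record simply stops matching once its end date has passed.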

Logical Deletions

Logical deletions, often called soft deletions, allow a given record to be treated as entirely deleted without physically deleting it from the database. In other words, the deletion is performed by some means other than the SQL delete statement.

This technique, while different from the preceding technique of effective dating a record, can also be used in conjunction with it to even greater effect. Returning to the auto insurance policy example, an insurance company may use effective dates to track policies, but what does their system do if a policy is created by mistake? For auditing purposes, it is highly unlikely they would want to permanently remove the mistake from their system, but from a practical perspective, the erroneous policy is garbage. Even without the need for data synchronization, an insurance company would likely implement some form of logical deletions to allow for such situations.

In this pattern, a boolean column (typically called deleted) is added to any record that might need to be logically deleted. This column defaults to False (i.e., not deleted), but can be changed at any time to True to logically delete the record. Since logically deleted records are still present in the database, the application itself will need to take care to exclude deleted records in most cases – and, since those records are still present, mobile clients that sync changes will still be able to sync those records. Provided that the client also is aware of logical deletions, then the system is capable of both deleting records, and syncing those deletions.
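A minimal sketch of this pattern follows, using an in-memory array in place of a real table. The function names and the `updatedAt` field are assumptions. The sketch also assumes that flagging a record bumps its modification timestamp, since that is what lets the differential sync from the last chapter notice the change.

```javascript
// Hypothetical server-side rows.
const campgrounds = [
  { id: 1, name: 'AAA Campground', deleted: false, updatedAt: 10 },
  { id: 2, name: 'AAA Campground', deleted: false, updatedAt: 20 },
];

// Logical delete: flip the flag and bump the timestamp so the next sync sees it.
function logicalDelete(rows, id, now) {
  const row = rows.find((r) => r.id === id);
  if (row) {
    row.deleted = true;
    row.updatedAt = now;
  }
}

// Ordinary application queries hide flagged rows.
function visible(rows) {
  return rows.filter((r) => !r.deleted);
}

// The sync query deliberately does not filter on the flag.
function changedSince(rows, lastSync) {
  return rows.filter((r) => r.updatedAt > lastSync);
}

logicalDelete(campgrounds, 2, 30);
console.log(visible(campgrounds).length, changedSince(campgrounds, 20).length);
```

A client that last synced at time 20 receives record 2 again, this time with `deleted` set to true, and can hide it locally in the same way.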

Tracking Deletions

Sometimes deletes cannot be avoided, whether due to legacy toolsets, a database directly shared among disparate systems, or some other constraint. The end result is the same: logical deletions and effective dating are not viable because of the application-level changes necessary to implement them.

If deletes cannot be avoided, then the system must keep track, in some fashion, of the fact that a delete occurred. One implementation of this is to allow the underlying record to be deleted from the database, but to use a separate table to audit the event. This can be accomplished in software, usually on the model, or if necessary with a database trigger. When a delete is requested, but before it is actually processed, the database identifier and model name are recorded in a separate table. Let’s look at how an implementation of this might look for deleting reviews from our running example.

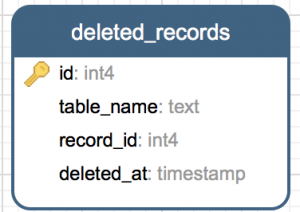

The first thing we will need is a database table to record the deletions – for lack of a better name, we will call it deleted_records.

With the table created, we will need a way of populating it. In Part IV of this book we will revisit this pattern when we begin discussing legacy integrations, but for now, let’s limit our discussion to the aforementioned means of populating this table:

Triggers

It is the author’s opinion that database level functionality, such as triggers, should be reserved as a last resort. If at all possible, other options should be explored first – however, if necessary, database triggers can be useful for situations where access to the original source of the application is nonexistent, or where it is otherwise unviable to make modifications to the application.

Code

When possible, it is preferable to track physical deletions via the application layer. Many ORMs have the ability to implement event handlers which are fired before each record is deleted. In such an event handler, logic can be implemented to record the id and type of model which was deleted, into a separate table – called deleted_records in our running example. Assuming your platform’s ORM implements such delete events within the same database transaction as the delete itself, there is no disadvantage to this pattern in terms of data integrity.

Since implementing this pattern in software is preferred, we will spend more time discussing that solution. However, for brevity, let’s first review how you could implement this pattern in the database layer.

In the above example, you can see how a before delete trigger would be implemented in Postgres. If you are using a different database, the syntax will likely vary, but the essence is the same – as records are deleted, capture their id and log that to the deleted_records table.

Implementing this pattern in code involves the addition of a model, and the addition of logic that executes before each model is deleted. Let’s look at the model first.

In terms of modeling, the ORM model for DeletedRecord is fairly pedestrian, looking no different than any other model. It is the usage of the DeletedRecord model that is of the most interest.

There are many ways this could be implemented, but they all share one thing in common: they all involve additional logic that is executed as a model is deleted, and that creates an instance of the DeletedRecord model discussed above.

The above modification to the CampgroundReview model illustrates how this typically looks. Before the model is deleted, information is captured from it and stored elsewhere in the application for later retrieval. In this fashion an audit trail is created, despite the original record being deleted.
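As a minimal sketch of this before-delete logic, the following uses plain in-memory tables rather than a real ORM. The function `deleteWithAudit`, the `db` object, and the column names `model`, `record_id`, and `deleted_at` are assumptions made for illustration. In a real application the two steps would run inside a single database transaction, as noted earlier.

```javascript
// Hypothetical in-memory tables standing in for the real database.
const db = { campground_reviews: new Map(), deleted_records: [] };

// Log the deletion first, then remove the row.
// With a real ORM, both steps would share one transaction.
function deleteWithAudit(tableName, modelName, id, now) {
  if (!db[tableName].has(id)) {
    return false; // nothing to delete, so nothing to log
  }
  db.deleted_records.push({ model: modelName, record_id: id, deleted_at: now });
  db[tableName].delete(id);
  return true;
}

db.campground_reviews.set(7, { id: 7, body: 'Great site' });
deleteWithAudit('campground_reviews', 'CampgroundReview', 7, 100);
console.log(db.deleted_records);
```

The review itself is gone, but its id and model name survive in `deleted_records` for the sync API to read later.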

Propagating Deletions

Tracking deletions is vital, but by itself it does little to facilitate the needs of data synchronization – to complete this pattern we must also notify the client device that a deletion occurred.

Other patterns outside of tracking deletions do not require this additional concern, as in those patterns records are never removed from the database. In the previous deletion tracking pattern, however, the client needs to be made aware that a deletion occurred, and must mimic that deletion. In our example, the recently created DeletedRecord model provides such a mechanism.

Specifically, the sync API that we first built in the previous chapter will be expanded to provide a more intelligent response to the caller. As presented, the sync API simply returns a list of models in JSON, as no additional information was necessary. Going forward, a reply may contain records to insert or update as well as records to delete, and the response JSON needs a way to differentiate those.

To accomplish this, we can separate the JSON response into two concerns – a concern of the data itself, as well as a concern of representing what to do with that information. In the case of a delete, the information itself will be limited to just the ID of the record to delete, whereas for insert/updates the data will be the model in its entirety.

Let’s take a look at how this might look in our example application. Presently our sync API looks like this:

To return meta-data, line 6 in the above excerpt will need to be more robust. Before we get ahead of ourselves, though, let’s look at an example of the type of meta-data we are discussing:

In the above JSON you can see how the result itself is still an array, but instead of an array of bare models, each element carries additional meta-data: an “action” element with a value of either “update” or “delete”. In this usage “update” is synonymous with “update or insert” because, as previously discussed, the patterns we are outlining do not differentiate those two scenarios.

There are a few ways to format this response JSON, including an advanced technique that we will discuss in chapter 8, but for now, let’s take a simpler approach on which we can iterate later.

The above example uses underscore.js’s map function to iterate over the array of model objects, producing a structure similar to the previously outlined JSON. For those unfamiliar with the underscore.js library, the map function is an implementation of the map operator made popular by functional programming and can be found in many non-functional languages via various libraries.
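For readers who want to experiment without installing underscore.js, the same wrapping step can be sketched with JavaScript's built-in `Array.prototype.map`, which is a substitute for the library call and not the library call itself. The function name `toUpdateEntries` is hypothetical; the `action` and `record` keys follow the structure described in this chapter.

```javascript
// Wrap each model in an envelope that says what the client should do with it.
function toUpdateEntries(models) {
  return models.map((model) => ({ action: 'update', record: model }));
}

const entries = toUpdateEntries([{ id: 1, name: 'AAA Campground' }]);
console.log(JSON.stringify(entries));
// prints [{"action":"update","record":{"id":1,"name":"AAA Campground"}}]
```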

The major flaw is that this still only handles updates and inserts – we have not yet exposed any deleted records through this API. To return both updates and deletions, the API will need to query both sets of data. The easiest way to do this is by performing multiple queries and then merging those result-sets together in the application layer. As we previously discussed, more advanced solutions to this will be discussed later, but for the time being, performing two independent database queries is not the worst solution.

In fact, since the queries are independent of one another, they can be executed in parallel. Depending on the platform and frameworks you are utilizing, this can range from a trivial optimization to a rather difficult one. For our running example, we can use the async.js library’s parallel function to rather easily perform both queries simultaneously.

The above example illustrates the basic pattern, with implementation details omitted. Simply put, two parallel code paths are described, each with the objective of finding independent data, processing it, and passing process control back to the controller (async.js in this example). Once both parallel tasks have completed, a completion handler runs, aggregates the two result sets into a single array, and returns it as JSON, as previously mentioned.
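The same shape can be sketched without async.js by using the standard `Promise.all`, which likewise waits for two independent tasks before a completion step runs. This is a swapped-in technique, not the async.js code described above. The query functions return canned data, and all function names are hypothetical. Placing the bare id under `record` for deletes is also an assumption.

```javascript
// Hypothetical query functions; real ones would hit the database.
async function findUpdatedReviews() {
  return [{ id: 1, body: 'Great site' }];
}

async function findDeletedReviewIds() {
  return [2];
}

// Completion step: updates and deletes end up in a single array.
function mergeResults(updated, deletedIds) {
  const updates = updated.map((record) => ({ action: 'update', record }));
  const deletes = deletedIds.map((id) => ({ action: 'delete', record: id }));
  return updates.concat(deletes);
}

// Start both queries at once, then merge after both have resolved.
async function buildSyncResponse() {
  const [updated, deletedIds] = await Promise.all([
    findUpdatedReviews(),
    findDeletedReviewIds(),
  ]);
  return mergeResults(updated, deletedIds);
}

buildSyncResponse().then((body) => console.log(JSON.stringify(body)));
```

Keeping `mergeResults` separate from the querying makes the aggregation easy to test on its own.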

A final version of this logic would look something like the following.

The logic to retrieve the DeletedRecord model, and the subsequent merging of the two record sets, are the most noteworthy elements here. Merging the two record sets involves concatenating two arrays. In our example no error handling has been performed, to keep the examples as small as possible, but in practical applications, checking for out-of-bounds conditions would be essential here.

The retrieval of the DeletedRecord model is what remains to be discussed here – let’s take a closer look:

The filter (e.g. SQL where-clause) for retrieving DeletedRecord models is somewhat different from that of the model being synced itself. Specifically, an additional filter on the model name is required to ensure that only delete logs for the specific entity are retrieved. Semantically, the updated_at field has also been replaced by the functionally equivalent deleted_at field, though the latter is purely an implementation detail of this pattern, and could just as easily have been called updated_at.

Additional differences occur when processing the models into the data structure the API expects. Since the delete log does not contain more than the id of the deleted record, nor does the caller of this API need more than that, this logic only plucks the id when constructing the desired data structure.
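The filter and the id-plucking step might be sketched as follows, with an in-memory array standing in for the deleted_records table. The column names `model`, `record_id`, and `deleted_at` and the function `deletedIdsFor` are assumptions for illustration.

```javascript
// Hypothetical rows from the deleted_records table.
const deletedRecords = [
  { model: 'CampgroundReview', record_id: 7, deleted_at: 100 },
  { model: 'Campground', record_id: 2, deleted_at: 120 },
  { model: 'CampgroundReview', record_id: 9, deleted_at: 40 },
];

// Keep only delete logs for one model that are newer than the caller's last sync,
// then pluck the id, since that is all the client needs.
function deletedIdsFor(rows, modelName, lastSync) {
  return rows
    .filter((row) => row.model === modelName && row.deleted_at > lastSync)
    .map((row) => row.record_id);
}

const ids = deletedIdsFor(deletedRecords, 'CampgroundReview', 50);
console.log(ids);
```

Only id 7 is returned here: id 2 belongs to another model, and id 9 was deleted before the caller's last sync.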

At this point, our example API has all the components necessary to serve up both updates and the ids of deleted records to the caller. The last missing component is to process those on the client device.

Processing Deletions

So far in this chapter, we have discussed deletions from the perspective of the server-side application. Many of the patterns discussed do not impose an additional burden upon the mobile client. For example, logical deletions simply require an additional SQL where-clause to assert that deleted is false when querying records. This is not the case for the pattern of allowing, and logging, physical deletions of records. In that pattern the mobile client must replicate those physical deletions in its own database; otherwise, it runs the risk of defeating the purpose of tracking deletions in the first place.

In the preceding section, we discussed the changes to the API that this pattern necessitates. The modifications to the API in that section altered the structure and meaning of the JSON response, so the client must be updated as well simply to maintain compatibility, let alone to add support for deleted records (which is truly what we are setting out to accomplish).

From a procedural standpoint, the mobile application’s sync mostly remains unchanged from what we established in chapter 2 – the real differentiators occur when processing the response from the API, specifically in the updateRecords method in our example. The general logic is as follows:

1. For each update record, determine if the action is update or delete.

   a. If update, retrieve the record itself from the record key of the data-structure, and process either a SQL update or insert based upon the existence of that record in the local database.

   b. If delete, issue a SQL delete based on the id stored in the record key of the data structure.

Let’s take those steps, and see how they would map to the updateRecords method in our example:

Here you can see the overall flow. Every API call will contain a mixture of both updates and deletes, and the mobile application must iterate over every entity and take the correct course of action.
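A minimal sketch of this branching follows, with a `Map` standing in for the device's local SQL table. Only the method name `updateRecords` and the `action` and `record` keys come from the discussion above. The `Map`, the sample entries, and the choice to throw on an unknown action are assumptions.

```javascript
// Hypothetical local store; a real client would issue SQL against its database.
const localReviews = new Map([[2, { id: 2, body: 'Duplicate' }]]);

function updateRecords(entries, table) {
  for (const entry of entries) {
    if (entry.action === 'update') {
      // Insert or update, depending on whether the row already exists.
      table.set(entry.record.id, entry.record);
    } else if (entry.action === 'delete') {
      // Deleting an id that is not present is a harmless no-op.
      table.delete(entry.record);
    } else {
      throw new Error(`Unknown sync action: ${entry.action}`);
    }
  }
}

// Deletes and updates arrive interleaved; the loop does not rely on their order.
updateRecords(
  [
    { action: 'delete', record: 2 },
    { action: 'update', record: { id: 1, body: 'Great site' } },
    { action: 'delete', record: 99 },
  ],
  localReviews
);
console.log([...localReviews.keys()]);
```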

Observant readers may realize that the API from the preceding section has a predictable order in which it will return results – due to how it concatenates two disparate query results into a single structure, we could assume that updates precede deletes when processing the API on the mobile application as well. It would be unwise, however, to assume this. It would be better to treat this ordering as an implementation detail of the API, and thus something that is subject to change. This decision will also prove to be beneficial, in that later chapters will introduce server-side techniques that may change the result ordering, and ideally, we would like to construct a mobile client that is as resilient to that sort of change as possible.

After a determination is made by the above code to either execute an update or delete, the logic branches. The update logic (i.e. step 1a) is identical to that outlined in the last chapter, with step 1b being all that is really new outside of the previously discussed branching logic.

Processing deletions on the mobile client involves trivial logic, and for SQL-based mobile clients like the one in our running example, it can be made even simpler. SQL databases do not object when asked to delete something that does not exist, so for every delete we can simply issue a SQL delete: if the record exists, it will be deleted, and if not, nothing happens. This technique not only simplifies the code itself but is also a performance optimization. To understand this, consider the alternative:

Had we chosen, for whatever reason, not to issue blanket delete statements, but instead to verify the existence of each record before determining if a delete was necessary, the logic would have probably looked something like:

- For each possible delete, query the database for that record by id

- If a record exists, delete it

- If a record does not exist, do nothing

In the best case scenario, the above logic issues one SQL statement per record, which is exactly as many as the more simplified logic of issuing deletes for everything. In the worst case scenario, the above logic issues two SQL statements per record (one to verify that the record exists, and one to delete it). In reality, you can probably expect the number to be somewhere in between.
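The arithmetic can be checked with a small sketch that counts the statements each strategy would issue, without touching a database. Both function names and the sample ids are hypothetical.

```javascript
// Blanket strategy: one DELETE per id, whether or not the row exists.
function blanketDeleteCount(ids) {
  return ids.length;
}

// Check-first strategy: one SELECT per id, plus one DELETE per row that exists.
function checkThenDeleteCount(ids, existingIds) {
  let statements = 0;
  for (const id of ids) {
    statements += 1; // SELECT to check existence
    if (existingIds.has(id)) {
      statements += 1; // DELETE only when the row was found
    }
  }
  return statements;
}

const toDelete = [1, 2, 3, 4];
const onDevice = new Set([1, 2]); // only half of the rows exist locally

console.log(blanketDeleteCount(toDelete)); // prints 4
console.log(checkThenDeleteCount(toDelete, onDevice)); // prints 6
```

With none of the rows present the two counts are equal at 4, and with all of them present the check-first strategy issues 8.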

Additionally, the above logic brings with it the burden of additional code, and it is the author’s opinion that less code is often preferable in situations like this. Finally, the simplified technique we will employ can also be expanded upon, and further optimized, using the techniques we will discuss in Part III of this book. Without further delay, let’s look at what this simplified logic would look like in our running example.

The above logic is a SQL delete statement, simply made more obscure by error handling and the same type of meta-programming we have seen throughout this book. Since we are discussing techniques that can process any type of model our example application may have, this is somewhat unavoidable.
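Stripped of platform specifics, a generic delete of this kind might be sketched as below. The function `buildDelete`, the table whitelist, and the `?` placeholder style are assumptions; a real client would pass the resulting statement and parameters to its own SQL library.

```javascript
// Tables the sync process is allowed to touch (hypothetical names).
const SYNCED_TABLES = new Set(['campgrounds', 'campground_reviews']);

// Build a parameterized DELETE for any synced table.
// The whitelist guards against interpolating an unexpected table name.
function buildDelete(tableName, id) {
  if (!SYNCED_TABLES.has(tableName)) {
    throw new Error(`Refusing to delete from unknown table: ${tableName}`);
  }
  return { sql: `DELETE FROM ${tableName} WHERE id = ?`, params: [id] };
}

const statement = buildDelete('campground_reviews', 7);
console.log(statement.sql, statement.params);
```

The id travels as a bound parameter, and only the table name, which comes from the application's own list of models, is interpolated into the statement text.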

From a mobile client perspective, this is all that is required to successfully process deletes as most of the work has been made easier by preparations done on the server-side of this process.

Summary

The techniques and patterns discussed in this chapter outline the varying possibilities for handling deletions in terms of data synchronization. It is the author’s experience that a sufficiently mature application will employ a healthy mixture of all of the above-outlined techniques – in part due to none of them being universally better, and also in part due to the organic nature of software development. Rarely is the ideal solution known at the outset of a project; more often a project’s goals evolve over time, culminating in situations that are, from an academic perspective, less than ideal but that an application must nonetheless account for.

From a future-proofing perspective, and with an eye towards the content we will cover in the next chapter, it is the author’s opinion that it is wise to implement the necessary data structures in both the API (discussed in the section titled Propagating Deletions) and the client-side processing (discussed in the section titled Processing Deletions), even if hard deletes are not utilized. The reasoning for this may not be apparent yet, but it will become obvious as we discuss access rights in the next chapter. In short, however, treating the client device as a “dumb device” whenever possible carries its advantages. In other words, asking the client device to delete a record by utilizing the techniques discussed in this chapter does not necessarily imply that a delete actually occurred on the server, but rather that the content may no longer be relevant for that specific client device.