Performance & Scaling

Coming Soon

Client to Server Updates

Thus far we have omitted any discussion of synchronizing data from mobile clients. As edge device capabilities increase, and our demand for data captured from mobile, IoT, and other edge devices increases, so too does the need to synchronize data from these devices.

Techniques for synchronizing from these devices must ensure the reliable, accurate, and timely transmission of data.

Our running example has served us well so far and represents a typical mobile application in these regards – syncing data from a server to store locally for offline usage. As effective as it has been, it will unfortunately not scale well to the discussion ahead without becoming contrived. Therefore the discussion ahead necessitates another example application.

Going forward we will instead use an example of a field agent application, which will fill the role of collecting information from surveyed community members and reporting the results back to a central web application. This example will serve our needs well, as field agents are mobile and are therefore not guaranteed to be connected to the Internet at any given point in time. Finally, surveys, censuses, polls, and the like are something that many people can understand, regardless of industry background.

We will begin constructing this new sample application in the next chapter, and continue developing it throughout the remainder of this book. Despite its differences, all the patterns discussed previously are equally applicable to this new application in the down-sync direction.

Access Rights

The discussion of access rights, and specifically how they are handled, is similar in concept to the discussion of deletions. In both scenarios, the sync API needs to be modified to be more general than just exposing a list of changed records. The two topics are conceptual siblings: neither depends on the other, so they can be discussed and approached in either order.

It should come as no surprise then that the implementation of access rights with regard to sync will be similar to deletes, in that both utilize SQL joins when fetching changed records in order to alter the response of that API. This, however, is where the simplicity of this discussion ends. The implementation of access control can be non-trivial, as a variety of considerations must be taken into account. Let’s briefly summarize those potential complexities before analyzing them more fully.

Potential Complexities

The potential complexities, and therefore considerations, that can arise when discussing access rights and how they pertain to data synchronization are varied; however, few applications have the luxury of avoiding them. Additionally, access rights and permissions, in general, are often as varied as the applications they secure. Best practices do exist in the security sector, but the implementation of those best practices can be just as varied as the implementations of data synchronization we are discussing. It is the intersection of those complexities that warrants additional consideration.

For all their variety, the topics for consideration can generally be broken down into three categories:

Excluding Records

The most basic and fundamental consideration of access rights is to exclude certain records from the view of certain users. This can range from simple ownership to more fine-grained visibility modifiers, but all share the common objective of restricting and granting access.

Granting & Revoking Access

Rarely is data static when discussing synchronization, and this is especially pronounced when it comes to adding and removing permissions over existing data. As a user’s visibility to data changes, so too do the techniques needed to synchronize their data.

Object Hierarchies

Object hierarchies, or the concept of parent/child relationships in data, present unique challenges to access rights when synchronizing data.

Excluding Records

Excluding records due to access rights is perhaps the most fundamental of access control requirements. All types of applications have the concept of permissions, and with that comes limiting visibility of data based on the authenticated user.

Excluding whole records from synchronization involves the conceptually simple process of ignoring those records when the sync API would have otherwise included them. Stated another way, even if a record’s updated_at timestamp would normally indicate its inclusion in a sync, some other attribute of that record overrides this decision and therefore excludes it from synchronization. This is perhaps best illustrated by returning to our running example.

In our running example, let’s add the concept of a paid membership to our user model. Paid users in this example will have access to certain information that non-paid users do not – specifically campground records themselves will be marked as either premium or not.

This change can be as straightforward as the addition of a single column, as is illustrated in the diagram above. By adding a campground_tier column to our Campground model, we can alter the synchronization process to key off that field when determining if a given campground should be included in a result set. Specifically for our example, we will use a value of zero to represent the default tier, and therefore something that is included for everyone, whereas values greater than zero would require that a user has a premium account. This decision is purely arbitrary, however, and simply serves to move our example forward. The DDL for this change can be represented as:

Until this chapter, however, our example has not needed a User model. The examples have intentionally glossed over things such as security and error handling so as not to obscure the principles we were discussing. The discussion of access rights requires that we introduce at least a minimal user model. For our example, a user may simply have a username, password hash, and access tier (which we will tie back to the campground tier). Therefore, the necessary DDL may look like:

With the users table added to the database, a User model can be created in JavaScript as follows:

For brevity, we will not dwell on the details of the model, as its form follows the patterns we have discussed so far, with the exception of a short discussion of the timestamps. In chapter 1, when discussing whole database synchronization, our models lacked such timestamps entirely. In chapter 2, when discussing delta synchronization, we added them retroactively to our example at the cost of lost data: we had to supply values for both updated_at and created_at for any existing database records. While there may not be any obvious utility in syncing user models, there is also limited downside in adding the necessary timestamp fields at the outset. In fact, doing so from the beginning is the recommended pattern, in that should a need arise for them, they will already be there. Following this pattern also allows developers on a project to follow a consistent routine without every developer necessarily having to fully understand the nuances of the pattern (though it is the author’s opinion that every developer would benefit from such knowledge).

With both the User model in place, as well as a campground_tier property added to the Campground model, we can return our discussion to that of access rights. This is accomplished by comparing the set of potential records to sync with the current user to identify the subset of those records that meet the desired characteristics. In terms of our example, this means campgrounds with specific tiers as correlated to each user’s access_tier.

The above diagram helps to illustrate this concept more tangibly. The large outer circle illustrates all known campgrounds in our sample system, while the smaller blue circle towards the bottom represents all the campgrounds which have been updated since a specific user last synced. Without additional modification to our example system, the blue also represents all the records the sync API will return to a mobile client in this scenario. However, the red circle represents all the free tier campgrounds (e.g. those available to users of our sample application without paid subscriptions), and in this scenario, the user who is trying to sync is not a paid subscriber. Therefore, the desired behavior is not to sync all the blue campgrounds, but rather the intersection of the red and blue circles – the recently updated free campgrounds.
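The intersection described above can be sketched in plain JavaScript. This is only an illustration over in-memory arrays, not the example application's code: the field names follow the chapter's campground_tier and access_tier, while the function name, sample records, and dates are hypothetical.

```javascript
// Hypothetical in-memory data; a real application would query the database.
const campgrounds = [
  { id: 1, name: "Free, stale", campground_tier: 0, updated_at: new Date("2020-01-01") },
  { id: 2, name: "Free, updated", campground_tier: 0, updated_at: new Date("2020-03-01") },
  { id: 3, name: "Premium, updated", campground_tier: 1, updated_at: new Date("2020-03-02") },
];

// Returns records that are both recently updated (the blue circle)
// and visible at the user's tier (the red circle, for a free user).
function campgroundsToSync(records, user, lastSync) {
  return records.filter(
    (c) => c.updated_at > lastSync && c.campground_tier <= user.access_tier
  );
}

const freeUser = { username: "alice", access_tier: 0 };
const lastSync = new Date("2020-02-01");
const result = campgroundsToSync(campgrounds, freeUser, lastSync);
console.log(result.map((c) => c.id)); // [ 2 ]
```

A paid user (access_tier of 1) passed to the same function would receive records 2 and 3, since both conditions then hold for the premium campground as well.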

Set operations such as this are what relational databases excel at, and SQL joins are precisely how this restriction of visibility can best be implemented. By modifying the sync API to join the active user to the campground model, in addition to its current logic, we can easily implement this restriction. Let’s review what this would look like in code:

The above code snippet utilizes specific features of the OnionRM library used within the example application; however, the general approach is common to almost any database access library. The code performs a SQL join from campgrounds to users based on the relationship between the user’s access tier and a given campground’s tier. While the syntax can be jarring, the logic essentially executes the following SQL:

As currently implemented, such logic can only be applied to the Campground model, as no other model in our example should or can be directly joined to the User model in this way. This presents a difficulty when attempting to incorporate the logic into the sync API as a whole: how do we restrict some models based on access rights while leaving others unrestricted? A naive approach might involve an if-statement to account for this specific scenario:

The above solution, while correct in behavior, is far from desirable in terms of its implementation. When we discuss object hierarchies later in this chapter we will revisit this and discuss a more general purpose solution, but until then there is still much in the way of clean up and preparation we can do. Specifically, the above approach contains an inner block of duplicate code resulting from the necessity of an outer conditional. This can be solved by abstracting away the data access request into an access chain pattern.

Access Chains

An access chain is a pattern which we will develop throughout the course of this chapter. A chained access descriptor is constructed piecemeal so that a holistic approach to access rights can be asserted over any given piece of data, without overly burdening any single component of the application with knowledge of the whole. Like many concepts, this will make more intuitive sense with exposure and practice. Returning to the dilemma of the previous section, let’s see how we might implement the access chain pattern to remove the redundancy of that previous code snippet. Let’s start by simply stating our goal for that bit of code:

- Find all recently updated records of a given model

- If that model happens to be the Campground model, additionally restrict those records based on the logic described in the last section

- Process those records for final output to the client

Stated this way, we can see that our current implementation duplicates steps 1 and 3, whereas ideally we’d prefer code without any duplication of those three steps. This duplication is the result of the conditionality of the second step: sometimes only the first and last steps are required, other times all three. To solve this we need to construct the query in the first step in such a way that it can be executed as-is, or conditionally modified, without branching the execution path. The solution, therefore, is to construct the query via a chained set of operations.
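As a rough sketch of the idea, the three steps can be expressed with a tiny chainable query object. The Query class below is hypothetical and works over an in-memory array; it merely stands in for an ORM's query chain and makes no claim about OnionRM's actual API.

```javascript
// Hypothetical chainable query over an array; stands in for an ORM query chain.
class Query {
  constructor(rows, predicates = []) {
    this.rows = rows;
    this.predicates = predicates;
  }
  // Adds a condition and returns a new chain; nothing is executed yet.
  where(predicate) {
    return new Query(this.rows, [...this.predicates, predicate]);
  }
  // Executes the chain, applying every accumulated condition.
  run() {
    return this.rows.filter((row) => this.predicates.every((p) => p(row)));
  }
}

function syncRecords(modelName, rows, user, lastSync) {
  // Step 1: recently updated records of the given model.
  let chain = new Query(rows).where((r) => r.updated_at > lastSync);
  // Step 2 (conditional): restrict campgrounds by the user's tier.
  if (modelName === "Campground") {
    chain = chain.where((r) => r.campground_tier <= user.access_tier);
  }
  // Step 3: execute once, whichever path was taken.
  return chain.run();
}

const user = { access_tier: 0 };
const lastSync = new Date("2020-02-01");
const campgrounds = [
  { id: 1, campground_tier: 0, updated_at: new Date("2020-03-01") },
  { id: 2, campground_tier: 1, updated_at: new Date("2020-03-01") },
];
const photos = [{ id: 9, updated_at: new Date("2020-03-01") }];

console.log(syncRecords("Campground", campgrounds, user, lastSync).length); // 1
console.log(syncRecords("CampgroundPhoto", photos, user, lastSync).length); // 1
```

Because the conditional step only extends the chain, the first and last steps appear exactly once in the function.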

Those familiar with jQuery may well be familiar with the pattern of chained objects. An initial jQuery selector is performed and returns another jQuery object which can be further refined until such time a final and desired result is achieved. An example of such jQuery usage might look like:

Had this trivial jQuery example required that sometimes the second p tag be hidden, and other times the first, it could instead be written like this:

Usefulness or best practices of the above jQuery code aside, as this is not a book on jQuery, the above does serve to illustrate an analogy of concept to that of access chains. Returning to our sample project, we can apply this pattern via OnionRM’s chained query model in the following fashion:

The initial query is constructed on the first line of the above code sample, whereas the conditional join to users is implemented on lines 2 through 5. By the time the application reaches line 7, and finally runs the query, it always expresses the desired result, regardless of which code path it took. Other ORMs allow for the implementation of this concept, provided they allow queries to be constructed piecemeal.

Functionally we are left with a piece of software that is no different than it was at the end of the last section, but it has gone from 20 lines to 13 lines, and in the process has significantly reduced both redundancy and mental complexity.

Throughout the following sections of this chapter we will revisit this pattern and expand upon our usage of it, as doing so will allow for a more succinct expression of intent. With this loose end wrapped up, and the pattern established, we can return our discussion to access rights and delve into the complexities of granting and revoking access.

Granting & Revoking Access

Both the addition and removal of access rights have an impact on data synchronization. This is especially noteworthy for devices which have already synced, where it creates the opportunity for gaps in synchronized records.

When adding permissions to an already synced user, the existing techniques we first discussed in the delta-based synchronization chapter begin to show their limitations. Specifically, if records are synced based on the comparison of each record’s updated_at timestamp with each device’s last sync timestamp, then records can be omitted from syncing to a client device after additional permissions are granted. The reason for this is counterintuitive at first glance but can be easily expressed with an example.

We have already seen scenarios that illustrate corner cases in synchronization, namely the scenario we began chapter 2 with when discussing handling deletions. In that scenario, we saw how the timing of events could lead to different representations of data on different users’ systems, despite both users being up-to-date with their sync. Similar timing issues can arise when granting or revoking access. Let’s return to our fictitious Alice and Ann from chapter 2. Alice is once again a user of our system, and Ann reprises her role as an administrator of our database. With that background, consider the following series of events:

- Alice, being an existing free-tier user, is already fully synced.

- The campground titled AAA Deluxe Campground is an existing premium campground in the system.

- The AAA Deluxe Campground is not accessible to Alice, for intended reasons.

- Alice upgrades to a paid subscription.

- Alice’s device syncs.

At this point, despite Alice having a paid subscription, she still cannot see the AAA Deluxe Campground in her application. In fact, she will never see this campground unless one of the following occurs:

- Ann, being the administrator, updates the campground’s listing.

- Alice deletes and re-installs the app.

Let’s take a moment to analyze why this is.

In the first possible “solution”, the missing campground will be picked up due to its timestamp being updated. In the second, the campground is picked up because the application is forced to re-perform its initial sync. Both of these possibilities hint at the underlying issue – the updated_at timestamp of the AAA Deluxe Campground is older than Alice’s last sync timestamp and is, therefore, being excluded from our existing sync algorithm. Our algorithm to date has never had the possibility for excluding records and has therefore never encountered a scenario where a user might gain access to records without the necessity of their updated_at timestamp also being updated.
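The gap can be reproduced with a few lines of plain JavaScript. The sketch below is an illustration only: deltaSync is a hypothetical stand-in for the timestamp-based algorithm with the tier check added, and the IDs and dates are invented.

```javascript
// Hypothetical delta sync: updated since the last sync AND visible to the user.
function deltaSync(campgrounds, user, lastSync) {
  return campgrounds.filter(
    (c) => c.updated_at > lastSync && c.campground_tier <= user.access_tier
  );
}

const campgrounds = [
  { id: 7, name: "AAA Deluxe Campground", campground_tier: 1, updated_at: new Date("2020-01-01") },
];
const alice = { username: "alice", access_tier: 0 };

// Alice is fully synced as a free-tier user.
const lastSync = new Date("2020-02-01");
// Alice upgrades; the campground record itself is not touched.
alice.access_tier = 1;

// The next sync still returns nothing: the record is older than lastSync.
const afterUpgrade = deltaSync(campgrounds, alice, lastSync);
// Only a full (initial) sync would pick the record up.
const fullSync = deltaSync(campgrounds, alice, new Date(0));
console.log(afterUpgrade.length, fullSync.length); // 0 1
```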

To resolve this the algorithm needs to somehow make allowances for retrieving some records that are older than the last sync timestamp.

One possible solution to this is to track when a user’s access tier changes, and use that to essentially re-force the initial sync from the server side. To do this we will first need to add a column to the users table:

We also add a field, and the related logic, to the User model to keep this column populated and to update it whenever a user’s access_tier changes:

Of particular importance are lines 12 through 14 of the User model. On those lines, within the before-save event, the application checks whether the access_tier field has changed and, if so, sets the last_access_tier_change field to the current date and time. This takes advantage of a feature of the ORM by which a model can be interrogated as to whether a field has been modified. This functionality is not unique to OnionRM and can be found in many other ORMs.
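For readers without the ORM at hand, the same idea can be sketched as a plain JavaScript class. This is not the OnionRM model: the tierChanged and save methods, and the private _savedTier field, are hypothetical stand-ins for an ORM's dirty-field check and before-save hook.

```javascript
// Hypothetical plain-JavaScript User; not the OnionRM model.
class User {
  constructor({ username, access_tier = 0 }) {
    this.username = username;
    this.access_tier = access_tier;
    this.last_access_tier_change = null;
    this._savedTier = access_tier; // value as of the last save
  }
  // Rough equivalent of asking an ORM whether a field is dirty.
  tierChanged() {
    return this.access_tier !== this._savedTier;
  }
  // Stand-in for a before-save hook followed by the save itself.
  save(now = new Date()) {
    if (this.tierChanged()) {
      this.last_access_tier_change = now;
    }
    this.updated_at = now;
    this._savedTier = this.access_tier;
    return this;
  }
}

const alice = new User({ username: "alice" }).save(new Date("2020-01-01"));
alice.access_tier = 1;
alice.save(new Date("2020-02-15"));
console.log(alice.last_access_tier_change.toISOString()); // 2020-02-15T00:00:00.000Z
```

Saving again without touching access_tier leaves last_access_tier_change as it was, which is what allows the sync API to compare it against a client's last sync time.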

Once the application can properly track when a user’s access_tier has last changed, we can update the sync API to make use of this.

In the above code, we can see the general idea: modify the API to re-perform the initial sync on a conditional basis. If the user’s permissions have changed since the client last synced, then ignore the supplied timestamp and just re-sync everything. This is crude but effective. The client device will never be missing data, and the approach is easy to implement. The downside is that it is very wasteful, especially in situations where user permissions change frequently or where the dataset is large.
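The conditional can be isolated into a small helper. The sketch below assumes the user object carries the last_access_tier_change field described earlier; the function name effectiveLastSync and the use of the Unix epoch to mean "sync everything" are assumptions made for illustration.

```javascript
// If the user's tier changed after their last sync, fall back to a full sync.
function effectiveLastSync(user, clientLastSync) {
  const changed = user.last_access_tier_change;
  if (changed && changed > clientLastSync) {
    return new Date(0); // epoch: treat this request like an initial sync
  }
  return clientLastSync;
}

const user = { access_tier: 1, last_access_tier_change: new Date("2020-02-15") };

// Client last synced before the tier change: everything is re-sent.
console.log(effectiveLastSync(user, new Date("2020-02-01")).getTime()); // 0
// Client already synced after the change: normal delta sync.
console.log(effectiveLastSync(user, new Date("2020-03-01")).toISOString()); // 2020-03-01T00:00:00.000Z
```

The rest of the sync logic can then use the returned timestamp in place of the one supplied by the client.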

Revoking access involves challenges too. Much like granting access can result in users missing data, revoking access (if not implemented properly) can result in the opposite – users may find themselves still able to see stale copies of data that they should no longer have access to.

The situation arises when a user, having previously been fully synced, loses access to a portion of that data which they have already synced. The key element here is a portion. Had the user lost access to all the data – perhaps in the case of a corporate app that only employees can access – then other, non-synchronization based solutions would be sufficient. In the case of a partial loss of access, the user should still be able to use and sync data within the application, albeit a smaller subset than previously.

In the last chapter, we discussed various techniques for handling deletions, one of which involved replicating hard deletes from the server to the client. If implemented, as records are deleted on the server, those IDs are recorded so that the same records can be deleted at a future date on a client device. This has the added benefit of allowing the server to revoke records from a client device.

To conceptualize this, think of it as virtual deletions. The client device, when told to delete a record with a specific ID, has no means of knowing if such a request is the result of a hard delete, soft delete, or in our current case a revocation of access.

To implement this the server must, when processing sync requests, identify the IDs of all records which a user previously had access to but no longer does, and present those IDs in the sync API response as if they had been deleted.

In our example application, we can find all the campgrounds that a user has access to by joining to the users table on user.access_tier >= campgrounds.campground_tier, so it stands to reason that all the campgrounds a user does not have access to can be identified by inverting that relationship.

This only needs to be performed when the user’s last_access_tier_change field is newer than the last sync timestamp supplied to the sync API – in other words, it only needs to be performed when the user’s permissions have changed since they last synced.
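A minimal sketch of this check, over in-memory records rather than a SQL join, might look as follows. The function name and sample data are hypothetical. Note that this simplified version reports every campground above the user's tier, including ones the device may never have held; that assumes the client ignores delete requests for IDs it does not have.

```javascript
// Returns IDs to report as deleted because the user can no longer see them.
function revokedCampgroundIds(campgrounds, user, clientLastSync) {
  const changed = user.last_access_tier_change;
  // Only needed when permissions changed since the client's last sync.
  if (!changed || changed <= clientLastSync) return [];
  // Inverted access check: the campground's tier is now above the user's.
  return campgrounds
    .filter((c) => c.campground_tier > user.access_tier)
    .map((c) => c.id);
}

const campgrounds = [
  { id: 1, campground_tier: 0 },
  { id: 2, campground_tier: 1 },
];
// Alice dropped back to the free tier on February 15.
const alice = { access_tier: 0, last_access_tier_change: new Date("2020-02-15") };

console.log(revokedCampgroundIds(campgrounds, alice, new Date("2020-02-01"))); // [ 2 ]
console.log(revokedCampgroundIds(campgrounds, alice, new Date("2020-03-01"))); // []
```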

Applying these changes to our example sync API involves adding an additional parallel code block. This is a rather unfortunate compromise and is the same compromise we had to make when discussing deletes. As was the case then, this too is logic that will be refactored in Part III of this book when we begin discussing performance and scalability – as this compromise is certainly neither. However, what it lacks in those respects it makes up for in terms of being simple to comprehend and keeps us focused on the task of conceptualizing the logic involved.

The implementation as seen in the immediately-preceding code snippet is presented in its entirety, though the bulk of the code is boilerplate. The core of the logic is isolated to these few lines:

In that logic, we invert the normal criteria for access rights by asserting the inequality of less-than (as opposed to greater-than-or-equal). Additionally, there are provisions for disregarding the API-supplied last sync time, and instead reverting to the point in time when the user’s access rights were updated. These two small but impactful changes result in virtual deletion records being synced upon revocation of access rights.

Object Hierarchies

When modeling permissions over real-world data, it is not uncommon to encounter parent-child relationships among entities. These hierarchies of data present a unique challenge, and opportunity, when it comes to synchronization.

For the purpose of accurately modeling a problem space, we might want the relationship between a child and its parent to have a meaningful impact on our application. Doing so not only permits the model to more closely mirror the real world, but it also allows the administrators of such an application to more concisely work with the users and their permissions. Said another way, when the system more closely mirrors the reality of the problem it is solving, the users of that system experience less mental fatigue when performing routine tasks.

Up to this point, however, our synchronization algorithms have essentially dealt with one model at a time. Updating the sync API in this regard involves revisiting the topic of query chains we discussed earlier in this chapter, with the goals of:

- Building a query that joins parent to child

- Allowing us to sync the child as normal

- Also allowing us to use the characteristics of the parent to additionally restrict which child objects are fetched

The above figure illustrates the objective. By asserting a relationship between a parent and its child, we can influence which child records are fetched during any given sync.

Returning briefly to our running example, this could be the case with campground reviews. Currently, our example application has a mechanism for restricting which campgrounds are synced to a user, but despite that, the reviews of those campgrounds are synced regardless of whether the user needs them.

At best this creates waste. Unnecessary objects are synced to client devices, wasting:

- Server resources (e.g. CPU & memory)

- Bandwidth (both client and server)

- Storage space on client devices

In our example, other than being wasteful, syncing these reviews is unlikely to be a consequential oversight. The same cannot be said for all applications and scenarios. If a child record represents private, confidential, or otherwise sensitive information, we would not want to sync those records. An example of this might be an insurance application, where notes are associated with claims. In this scenario, an insurance claim might be visible to only specific people, such as the insurance policyholder. As the claims process is worked, various notes might be added to the claim. These notes might even contain private information, and unnecessarily syncing them to mobile users who do not have permissions over them is not just wasteful; it could also be a legal liability to the insurance company.

Despite our running example not having the same level of criticality as the insurance example we just discussed, it will suffice nevertheless.

To begin we must extend the query chain, but do so in a way that is general – all calls to the sync API must execute the same logic, regardless of whether they belong to a parent object.

One way to accomplish this is to add a method to each model by which it can be interrogated. The logic would look like this:

- The sync API invokes a method on the model

- The method returns a query chain

  - If the model has no parent, the chain is the model itself

  - If the model has a parent, the chain is the model joined to its parent

- The API extends the chain as needed

- The API finally executes the query chain and serializes the results to send to the caller

Steps 3 & 4 in the preceding outline should look familiar, as they are essentially the steps we discussed in the section on access chains. For that matter, the second step should also be vaguely familiar, as it too is similar to the algorithm outlined previously. The real distinction here is that we are pushing this logic into the application’s models, and therefore abstracting this conditional behavior away from the API. From the API’s perspective, all models are treated the same: it simply defers to each model to get the necessary query chain and goes from there. Each model is then at liberty to construct its own access chain as is unique and necessary to that model.
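The outline can be sketched in plain JavaScript before turning to the sample application. Everything here is hypothetical: a chain is represented as a simple list of predicates, timestamps are plain numbers, and Region is an invented model with no parent and no restrictions.

```javascript
// Hypothetical models; each builds its own chain (here, a list of predicates).
const models = {
  Region: {
    rows: [{ id: 1, updated_at: 5 }],
    // Default case: the chain starts with no extra conditions.
    scopedForUser: () => [],
  },
  Campground: {
    rows: [
      { id: 1, campground_tier: 0, updated_at: 5 },
      { id: 2, campground_tier: 1, updated_at: 5 },
    ],
    // The model, not the API, knows how to restrict itself for a user.
    scopedForUser: (user) => [(c) => c.campground_tier <= user.access_tier],
  },
};

function sync(modelName, user, lastSync) {
  const model = models[modelName];
  const chain = model.scopedForUser(user); // steps 1 and 2
  chain.push((r) => r.updated_at > lastSync); // step 3: extend the chain
  // Step 4: execute the chain.
  return model.rows.filter((r) => chain.every((p) => p(r)));
}

console.log(sync("Region", { access_tier: 0 }, 0).length); // 1
console.log(sync("Campground", { access_tier: 0 }, 0).length); // 1
```

The sync function contains no model-specific branching; adding a restriction to another model only requires changing that model's scopedForUser.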

Let’s review how this would be implemented in our sample application.

First, we add a class-level (i.e. static) method to each model called scopedForUser, accepting a single parameter of the currently authenticated user, and returning a query chain for the current model.

The previous code snippet represents the default case for all models. We will circle back to this, but first, let’s update the API to make use of these new methods. Recall that the sync API first creates a query chain based on the user-supplied criteria like this:

This is also the line of code that will need to be updated. Instead of directly starting a query chain, the API should instead delegate this functionality to the model, like this:

At this point, the API remains functional, and functionally unchanged for that matter. If we were to stop here, the only result would be more opaque code. Luckily it is not our intention to stop refactoring here; instead, we will return our focus to the scopedForUser methods we created earlier.

In the previously outlined steps, we mentioned that our scopedForUser method should return a query chain that either:

- If model has no parent, the chain is the model itself, or

- If model has a parent, the chain is the model joined to its parent

Applying this to our example app, we will leave the Campground model’s scopedForUser alone, as Campground has no parent. The CampgroundPhoto and CampgroundReview models will be updated to join to their parent (Campground).

All that remains is to push the access tier logic into the scopedForUser method, and thus remove it from the API itself.

For Campground, this is as straightforward as porting the existing logic we previously created in the API. Thus the Campground model’s scopedForUser would look like:

For the CampgroundReview and CampgroundPhoto models, the process is similar. By joining each of those models to the Campground model, we can then update that query chain to perform logic similar to that of the Campground model itself. Thus, when a campground is excluded from a sync, its child objects are also excluded by the same logic.
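The effect of joining a child to its parent can be illustrated without a database. In this sketch, which uses invented sample data and a hypothetical campground_id foreign key, a review is only returned when its parent campground passes the same tier check.

```javascript
const campgrounds = [
  { id: 1, campground_tier: 0 },
  { id: 2, campground_tier: 1 },
];
const reviews = [
  { id: 10, campground_id: 1, body: "Quiet and clean" },
  { id: 11, campground_id: 2, body: "Worth the upgrade" },
];

// Mimics joining reviews to campgrounds, then applying the parent's tier check.
function reviewsScopedForUser(user) {
  const byId = new Map(campgrounds.map((c) => [c.id, c]));
  return reviews.filter((r) => {
    const parent = byId.get(r.campground_id);
    return parent !== undefined && parent.campground_tier <= user.access_tier;
  });
}

console.log(reviewsScopedForUser({ access_tier: 0 }).map((r) => r.id)); // [ 10 ]
console.log(reviewsScopedForUser({ access_tier: 1 }).map((r) => r.id)); // [ 10, 11 ]
```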

Observant readers may have questioned why we ever joined the Campground model to the User model earlier in this chapter. On the surface, it does appear to be a frivolous step. Twice now we have refactored that logic, keeping the join each time, and even adding such logic to additional classes, as we have just done. The reasoning for this is one of separation of concerns, and it would not have been apparent without first discussing both access chains and the further refactoring of such chains into the models themselves.

By joining the Campground model to the User model we have been able to perform the necessary security checks on the SQL join directly, as opposed to within the Campground model’s usual where-clause. This distinction is important, in that it separates out those such conditions that relate to security. Consider again how the API consumes the scopedForUser method:

From the perspective of the API, the scopedForUser method is a black box: the API has no idea what steps the method performs, just that it returns a query chain that can be extended, and extend it the API does. With the very last function call in the previously shown snippet, it appends additional where-clauses by way of the find method. Suppose for a moment that, either by accident or malice, the filter supplied to find contained an assertion about the campground’s tier.

Had the scopedForUser method asserted the user’s visibility by validating directly against the same model, then the previous logic would have overwritten such assertions and potentially exposed more data to a user than was intended. By instead joining to another table, and performing the security constraints there, we avoid the potential for such simple, and yet unfortunate, lapses in security. This change by no means makes the system foolproof, but it certainly goes a long way toward that goal.
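The difference can be demonstrated with a small sketch. Both functions below are hypothetical and operate on an in-memory array; the fragile version also simplifies the tier check to an equality so that it can live in the same filter object the caller extends.

```javascript
const campgrounds = [
  { id: 1, campground_tier: 0 },
  { id: 2, campground_tier: 1 },
];
const freeUser = { access_tier: 0 };

// Turns a { field: value } filter into an equality check on each field.
const matches = (row, filter) =>
  Object.entries(filter).every(([key, value]) => row[key] === value);

// Fragile: the security condition lives in the same filter the caller extends,
// so a caller-supplied campground_tier silently replaces it.
function findFragile(user, callerFilter) {
  const filter = { campground_tier: user.access_tier, ...callerFilter };
  return campgrounds.filter((c) => matches(c, filter));
}

// Safer: the security check is a separate condition (like a join constraint)
// that the caller's filter cannot overwrite.
function findScoped(user, callerFilter) {
  return campgrounds
    .filter((c) => c.campground_tier <= user.access_tier)
    .filter((c) => matches(c, callerFilter));
}

const malicious = { campground_tier: 1 };
console.log(findFragile(freeUser, malicious).map((c) => c.id)); // [ 2 ]
console.log(findScoped(freeUser, malicious).map((c) => c.id)); // []
```

In the scoped version the caller's filter can only narrow the result further, never widen it.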

Mobile

Throughout this chapter, we have focused on server-side changes. The mobile application was not an afterthought in this process, however. Many of the designs we set out were actually crafted with the intention of minimizing, if not entirely preventing, changes in the mobile application. Indeed, a carefully crafted sync API is designed to do just this, as changes on client devices are more expensive than changes on the server. Due to the inherent nature of such systems, one can never have full control over when client devices upgrade. For this reason, a well-designed sync API needs to maintain a degree of backward compatibility, and the more forethought and care taken to prevent, or limit, changes on the client, the less backward compatibility needs to be supported going forward.

If not already implemented for the purpose of deletions, the mobile techniques discussed in the last chapter for processing deletions should be implemented at this time. By doing so, even if no true deletions will occur, the techniques outlined in this chapter for virtual deletions can be implemented.
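As a reminder of what that client-side handling amounts to, here is a minimal sketch. The response shape ({ updated, deleted }) and the use of a Map as the local store are assumptions for illustration; a real mobile client would write to its local database instead.

```javascript
// Applies a sync response to a local store keyed by record ID.
// The response shape ({ updated, deleted }) is an assumption for this sketch.
function applySyncResponse(localStore, response) {
  for (const record of response.updated) {
    localStore.set(record.id, record); // insert or overwrite
  }
  for (const id of response.deleted) {
    // Hard delete, soft delete, or revocation: the client treats them alike.
    // Deleting an ID that is not present is a harmless no-op.
    localStore.delete(id);
  }
  return localStore;
}

const store = new Map([
  [1, { id: 1, name: "Old name" }],
  [2, { id: 2, name: "AAA Deluxe Campground" }],
]);
applySyncResponse(store, {
  updated: [{ id: 1, name: "New name" }],
  deleted: [2, 99],
});
console.log([...store.keys()]); // [ 1 ]
```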

Outside of that one consideration, no additional changes should be necessary for a mobile application to facilitate the techniques discussed in this chapter.

Summary

As we set out to accomplish, we have surveyed some of the various complexities that can arise when introducing permissions and access rights. In the course of discussing the patterns for these challenges, we have also seen the complexity of the sync API increase quite substantially. We have taken some limited steps to reduce, or at least constrain, the increase, but we have not set out to refactor that yet.

In Part III of this book, we will return to this subject and refactor our API as we prepare to scale, both in terms of API usage and API complexity. For now, we have completed our initial tour of server to client synchronization, and thus Part I of this book.

Handling Deletions

When we first discussed Whole Database synchronization I mentioned that the timing of when updates were applied had to be taken into consideration. In that pattern, we wanted to avoid creating a scenario where the user was interacting with a record from the database (like viewing a campground listing on their phone), and while doing that, have that record go missing because an updated database was applied that lacked that record. In that pattern deletes were handled “for free”, provided we were careful in the aforementioned regard.

In the last chapter, we had our first look at a differential sync algorithm which promised to fix many of the shortcomings of whole database synchronization. We concluded that chapter without bringing up one pesky detail – it doesn’t handle deletions, at all. Let’s illustrate this with an example.

Deletes are Difficult

To better understand how deletes break our differential sync algorithm, let’s consider a simple example. In our example we have Alice, and Ann. Alice is a user of our mobile application, and Ann is an administrator of our database. As an administrator, Ann is responsible for content updates to our system. Now, consider the following series of events:

- Alice downloads our application and performs the initial sync

- Sometime later, Ann creates a new campground named AAA Campground

- Alice syncs her app, and it retrieves the new listing for AAA Campground

- Ann, realizing that a listing for AAA Campground already existed in the system, deletes the more recently created record.

- Alice syncs her app

In this scenario, the desired behavior would be that the more recent AAA Campground listing would be removed from Alice’s mobile device. Unfortunately, this is where desire and reality diverge – Alice’s mobile device will not know to delete the listing, and therefore Alice will continue to see both AAA Campground listings.

What if, after step 5 above, Fred (also a user of the mobile application) downloads the application, and performs the initial sync? If that were to happen, then Fred and Alice would see different sets of data, despite both of their applications saying that they were up-to-date. Only Fred would actually see the correct set of data, while Alice would perpetually have a duplicate listing.

The reason all of this occurs is that the algorithm we’ve discussed so far simply does not handle deletions. On the mobile side of the sync, the application only performs inserts and updates into its local database. On the server-side, the API only knows to provide data based on the contents of its database, and by deleting information from that database, the API lacks the information it needs to inform the client application that a change occurred. These are certainly not ideal dilemmas – fortunately, there are solutions to these conundrums.
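To make the failure concrete, here is a minimal sketch of the scenario above. It is not the book's sample code: the in-memory `server` and `device` maps, the logical `clock`, and the function names are all hypothetical stand-ins for a real database, real timestamps, and the real sync API.

```javascript
// Hypothetical in-memory stand-ins for the server database and Alice's device.
const server = new Map(); // id -> { id, name, updatedAt }
const device = new Map(); // Alice's local copy
let clock = 0;            // logical clock standing in for timestamps

function serverSave(id, name) {
  server.set(id, { id, name, updatedAt: ++clock });
}

// Differential sync as described so far: return rows changed since lastSync.
function changesSince(lastSync) {
  return [...server.values()].filter((row) => row.updatedAt > lastSync);
}

// The client only ever inserts or updates; it has no way to learn of a delete.
function syncDevice(lastSync) {
  for (const row of changesSince(lastSync)) device.set(row.id, { ...row });
  return clock; // new high-water mark
}

serverSave(1, 'AAA Campground');
let lastSync = syncDevice(0);    // Alice's initial sync
serverSave(2, 'AAA Campground'); // Ann creates a duplicate listing
lastSync = syncDevice(lastSync); // Alice receives it
server.delete(2);                // Ann hard-deletes the duplicate
lastSync = syncDevice(lastSync); // Alice syncs again; nothing is sent

console.log(server.size, device.size); // prints "1 2"
```

The server ends with one listing while the device still holds two, because a hard delete leaves nothing behind for `changesSince` to return.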

Solutions

Now that you have a better understanding of why deletions are troublesome for the algorithm, let’s discuss what solutions exist, and the various pros and cons that each provides.

Avoid Deletions

The easiest problem to fix is the problem that was avoided. The same axiom holds here – if we can negate the need to perform deletions entirely, then we have avoided a huge potential pitfall. The options for avoiding deletes are very use-case specific, and may not be valid for all use-cases.

Track Deletions

If deletions cannot be avoided, one solution is to allow the records to be hard deleted but to implement some form of tracking mechanism. By this mechanism, the application can keep a list of all the record identifiers that have been deleted, and supply that list to client devices so that they can do likewise.

Logical Deletions

An alternative to hard deletes is logical deletes. For those unaccustomed to this concept, it is simply the idea of adding a deleted flag to each record. Records with the flag set to true should be ignored and treated as if they were not in the dataset.

Each of the above options carries its own unique set of benefits and risks, and no one solution is universally best. For this reason, it is not uncommon for an application of sufficient size to implement a mixture of all three. Let’s discuss each of them in more detail and see how they might be implemented.

Avoiding Deletions

This is perhaps the hardest of the three solutions to visualize because it solves the problem by avoiding the problem, and can thusly take many forms. The possibilities here are numerous and could take the form of:

- Analysis to determine whether deletes are necessary at all

- Effective dates to avoid the necessity of deletes

- Chained recordsets

Of the above suggestions, effective dates and record chains are the most tangible – because, after all, if you could simply review your requirements and determine that deletes were not necessary, why would we be having this conversation?

Effective dating a record is the process by which records in a dataset have an explicit beginning or ending date (and in some cases both). It is a powerful technique by itself and is often used by applications without any data synchronization needs.

For an example of this, think about insurance documents, specifically automobile insurance. When you purchase auto insurance, your coverage will begin on a specific date and end on a specific date. These dates are the effective begin, and effective end dates.

Now, think about when your auto insurance policy is about to expire. You still have coverage, so you are not yet to the effective-end date, but your insurance company has already issued you your next policy in advance (so that you can, if necessary, print a copy of the proof of insurance card). In the insurance company’s system are now two records for your policy, one which is currently effective, and one not yet effective.

As the above table illustrates, the same policy id has multiple entries, varying only by their effective dates. As each policy year comes, assuming you maintain insurance coverage, a new record will be inserted into the above table – records are never deleted, and that is the crucial element we are looking for.

Such a pattern is not without its downsides, and may not be suitable for every application. One specific consideration is that database queries against such a structure must take effective dates into account, and be careful not to assume a given record is effective yet (or still effective). By following this pattern, however, an application will never need to hard delete a record; it can thus work within the sync pattern discussed thus far, and also reap the benefits of being able to track changes over time.
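As a rough illustration of the query-side care this requires, the sketch below filters effective-dated rows down to those in effect on a given day. The field names (`policyId`, `effectiveBegin`, `effectiveEnd`) and the sample rows are assumptions made for this example, not the insurance company's actual schema.

```javascript
// Hypothetical policy rows; ISO date strings compare correctly as plain strings.
const policies = [
  { policyId: 42, effectiveBegin: '2023-01-01', effectiveEnd: '2023-12-31' },
  { policyId: 42, effectiveBegin: '2024-01-01', effectiveEnd: '2024-12-31' },
];

// Return only the rows in effect on the given date (inclusive on both ends).
// A null begin or end date is treated as open-ended.
function effectiveOn(rows, isoDate) {
  return rows.filter(
    (row) =>
      (row.effectiveBegin === null || row.effectiveBegin <= isoDate) &&
      (row.effectiveEnd === null || row.effectiveEnd >= isoDate)
  );
}

// In mid-December both rows exist, but only the 2023 row is in effect.
const current = effectiveOn(policies, '2023-12-15');
```

Neither row is ever deleted; the renewal simply becomes the effective row once its begin date arrives.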

Logical Deletions

Logical deletions, often called soft deletions, allow a given record to be treated as entirely deleted without physically deleting the record from the database. In other words, the deletion is performed by some means other than the SQL delete statement.

This technique, while different from the preceding technique of effective dating a record, can also be used in conjunction with it to even greater effect. Returning to the auto insurance policy example, an insurance company may use effective dates to track policies, but what does their system do if a policy is created by mistake? For auditing purposes, it is highly unlikely they would want to permanently remove the mistake from their system, but from a practical perspective, the erroneous policy is garbage. Even without the need for data synchronization, an insurance company would likely implement some form of logical deletions to allow for such situations.

In this pattern, a boolean column (typically called deleted) is added to any record that might need to be logically deleted. This column defaults to False (i.e., not deleted), but can be changed at any time to True to logically delete the record. Since logically deleted records are still present in the database, the application itself will need to take care to exclude deleted records in most cases – and, since those records are still present, mobile clients that sync changes will still be able to sync those records. Provided that the client also is aware of logical deletions, then the system is capable of both deleting records, and syncing those deletions.
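A minimal sketch of this pattern follows, using an in-memory array in place of a real table. The helper names are hypothetical, and the sketch assumes that flagging a record also bumps its `updatedAt` value, since that is what lets the existing differential sync pick the change up.

```javascript
// Hypothetical server-side rows; `deleted` defaults to false.
const campgrounds = [
  { id: 1, name: 'AAA Campground', deleted: false, updatedAt: 1 },
  { id: 2, name: 'AAA Campground', deleted: false, updatedAt: 2 },
];

// Logically delete a row: flip the flag and bump updatedAt so that the change
// is picked up by the next differential sync.
function softDelete(rows, id, now) {
  const row = rows.find((r) => r.id === id);
  if (row) {
    row.deleted = true;
    row.updatedAt = now;
  }
}

// Sync queries deliberately include deleted rows so clients learn of them.
const changedSince = (rows, lastSync) => rows.filter((r) => r.updatedAt > lastSync);

// Everything user-facing must exclude them.
const visible = (rows) => rows.filter((r) => !r.deleted);

softDelete(campgrounds, 2, 3);
const delta = changedSince(campgrounds, 2); // what a client at lastSync = 2 receives
```

The client receives the flagged row like any other update, and then applies the same `visible`-style filter in its own queries.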

Tracking Deletions

Sometimes deletes cannot be avoided. Whether due to legacy toolsets, a database directly shared among disparate systems, or otherwise, the end result is the same – logical deletions and effective dating records are nonviable due to the application level changes necessary to implement them.

If deletes cannot be avoided, then the system must keep track that the delete occurred in some fashion. One implementation of this is to allow the underlying record to be deleted from the database, but use a separate table to audit this event. This can be accomplished in software, usually on the model, or if necessary with a database trigger: when a delete is requested, but before it is actually processed, the database identifier and model name are recorded in a separate table. Let’s look at how an implementation of this might look for deleting reviews from our running example.

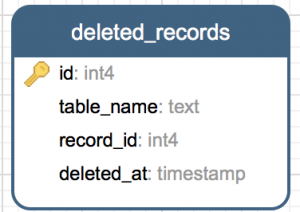

The first thing we will need is a database table to record the deletions – for lack of a better name, we will call it deleted_records.

With the table created, we will need a way of populating it. In part IV of this book we will revisit this pattern when we begin discussing legacy integrations, but for now, let’s limit our discussion to the aforementioned means of populating this table:

Triggers

It is the author’s opinion that database level functionality, such as triggers, should be reserved as a last resort. If at all possible, other options should be explored first – however, if necessary, database triggers can be useful for situations where access to the original source of the application is nonexistent, or where it is otherwise unviable to make modifications to the application.

Code

When possible, it is preferable to track physical deletions via the application layer. Many ORMs have the ability to implement event handlers which are fired before each record is deleted. In such an event handler, logic can be implemented to record the id and type of model which was deleted, into a separate table – called deleted_records in our running example. Assuming your platform’s ORM implements such delete events within the same database transaction as the delete itself, there is no disadvantage to this pattern in terms of data integrity.

Since implementing this pattern in software is preferred, we will spend more time discussing that solution. However, for brevity, let’s first review how you could implement this pattern in the database layer.

In the above example, you can see how a before delete trigger would be implemented in Postgres. If you are using a different database, the syntax will likely vary, but the essence is the same – as records are deleted, capture their id and log that to the deleted_records table.

Implementing this pattern in code involves the addition of a model, and the addition of logic that executes before each model is deleted. Let’s look at the model first.

In terms of modeling, the ORM model for DeletedRecord is fairly pedestrian, looking no different than any other model. It is the usage of the DeletedRecord model that is of the most interest.

There are many ways this could be implemented, but they all share one thing in common: they all involve additional logic that is executed as a model is deleted, and that creates an instance of the DeletedRecord model discussed above.

The above modification to the CampgroundReview model illustrates how this typically looks. Before the model is deleted, information is captured from it and stored elsewhere in the application for later retrieval. In this fashion an audit trail is created, despite the original record being deleted.
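For readers without the original listing at hand, the sketch below shows the general shape of such a hook. It is not tied to any particular ORM: the `beforeDelete` and `destroy` methods, the in-memory tables, and the field names `modelName`, `recordId`, and `deletedAt` are all hypothetical.

```javascript
// Hypothetical in-memory tables standing in for the real database.
const campgroundReviews = new Map([[7, { id: 7, body: 'Great site' }]]);
const deletedRecords = []; // rows of { modelName, recordId, deletedAt }

// Minimal stand-in for an ORM model with a before-delete hook.
class CampgroundReview {
  static beforeDelete(record, now) {
    // Capture just enough to tell clients what disappeared.
    deletedRecords.push({
      modelName: 'CampgroundReview',
      recordId: record.id,
      deletedAt: now,
    });
  }

  static destroy(id, now = Date.now()) {
    const record = campgroundReviews.get(id);
    if (!record) return false;
    CampgroundReview.beforeDelete(record, now); // log first...
    campgroundReviews.delete(id);               // ...then hard delete
    return true;
  }
}

CampgroundReview.destroy(7, 1000);
```

In a real application both writes would belong in the same database transaction, so that a failed delete never leaves an orphaned log entry behind.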

Propagating Deletions

Tracking deletions is vital, but by itself it does little to facilitate the needs of data synchronization – to complete this pattern we must also notify the client device that a deletion occurred.

Other patterns outside of tracking deletions do not require this additional concern, as in those patterns records are never removed from the database. In the previous deletion tracking pattern, however, the client needs to be made aware that a deletion occurred, and must mimic that deletion. In our example, the recently created DeletedRecord model provides such a mechanism.

Specifically, the sync API that we first built in the previous chapter will be expanded to provide a more intelligent response to the caller. As presented, the sync API simply returns a list of models in JSON, as no additional information was necessary. Going forward a reply may contain records to insert/update as well as records to delete, and the response JSON needs a way to differentiate those.

To accomplish this, we can separate the JSON response into two concerns – a concern of the data itself, as well as a concern of representing what to do with that information. In the case of a delete, the information itself will be limited to just the ID of the record to delete, whereas for insert/updates the data will be the model in its entirety.

Let’s take a look at how this might look in our example application. Presently our sync API looks like this:

To return meta-data, line 6 in the above excerpt will need to be more robust. Before we get ahead of ourselves, though, let’s look at an example of the type of meta-data we are discussing:

In the above JSON you can see how the result itself is still an array, but instead of an array of models, it is an array of entries that carry additional meta-data: specifically, an “action” element with options of either “update” or “delete”. Here “update” is synonymous with “update or insert” since, as previously discussed, the patterns we are outlining do not differentiate those two scenarios.

There are a few ways to format this response JSON, including an advanced technique that we will discuss in chapter 8, but for now, let’s take a simpler approach that we can iterate on later.

The above example uses underscore.js’s map operator to iterate over the array of model objects, producing a structure similar to the previously outlined JSON. For those unfamiliar with the underscore.js library, the map function is an implementation of the map operator made popular by functional programming and can be found in many non-functional languages via various libraries.
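As a dependency-free sketch of the same transformation, JavaScript's built-in `Array.prototype.map` can stand in for the underscore.js version. The helper name `toSyncEntries` is hypothetical; the `action` and `record` keys follow the structure described in this chapter.

```javascript
// Wrap each model in an envelope naming the action the client should take.
function toSyncEntries(models, action) {
  return models.map((model) => ({ action, record: model }));
}

const updates = toSyncEntries([{ id: 1, name: 'AAA Campground' }], 'update');

console.log(JSON.stringify(updates));
// prints [{"action":"update","record":{"id":1,"name":"AAA Campground"}}]
```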

The major flaw is that this still only works with updates and inserts – we have not yet exposed any deleted records through this API. To expose both, the API will need to query both sets of data. The easiest way to do this is by performing multiple queries and then merging those result-sets together in the application layer. As we previously discussed, more advanced solutions to this will be discussed later, but for the time being, performing two independent database queries is not the worst solution.

In fact, since the queries are independent of one another, they can be executed in parallel. Depending on the platform and frameworks you are utilizing, this can range from a trivial optimization to a rather difficult one. For our running example, we can use the async.js library’s parallel function to rather easily perform both queries simultaneously.

The above example illustrates the basic pattern, with implementation details omitted. Simply put, two parallel code paths are described, each with the objective of finding independent data, processing it, and passing process control back to the controller (async.js in this example). Once both parallel tasks have completed, a completion handler runs, aggregates the two result sets into a single array, and returns JSON – as previously mentioned.
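The same two-query pattern can also be sketched without async.js, using the built-in `Promise.all` as a stand-in for its parallel function. Both query functions below are hypothetical stubs that resolve with fixed data, and the `{ id }` shape of a delete entry's `record` is an assumption made for this example.

```javascript
// Hypothetical stubs; a real application would query its database here.
async function findUpdatedCampgrounds() {
  return [{ id: 1, name: 'AAA Campground' }];
}

async function findDeletedCampgroundIds() {
  return [2];
}

// Start both queries at once, wait for both, then merge into a single array.
async function buildSyncResponse() {
  const [updated, deletedIds] = await Promise.all([
    findUpdatedCampgrounds(),
    findDeletedCampgroundIds(),
  ]);
  return [
    ...updated.map((record) => ({ action: 'update', record })),
    ...deletedIds.map((id) => ({ action: 'delete', record: { id } })),
  ];
}

buildSyncResponse().then((response) => console.log(JSON.stringify(response)));
```

If either query rejects, `Promise.all` rejects as a whole, which is usually the desired behavior here: a partial response could cause a client to miss deletions.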

A final version of this logic would look something like the following.

The logic to retrieve the DeletedRecord model, and the subsequent merging of the two record sets, are the most noteworthy elements here. Merging of the two record sets involves concatenating together two arrays – in our example, no error handling has been performed, to keep the examples as small as possible, but in practical applications, checking for out of bounds conditions would be essential here.

The retrieval of the DeletedRecord model is what remains to be discussed here – let’s take a closer look:

The filter (e.g. SQL where-clause) for retrieving DeletedRecord models is somewhat different from that of the model being synced itself. Specifically, the additional filter of the model name is required to ensure that only delete logs for the specific entity are retrieved, and semantically the updated_at field has been replaced by the functionally equivalent deleted_at field – though the latter is purely an implementation detail of this pattern, and the field could just as easily have been called updated_at.

Additional differences occur when processing the models into the data structure the API expects. Since the delete log does not contain more than the id of the deleted record, nor does the caller of this API need more than that, this logic only plucks the id when constructing the desired data structure.
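The filter and the id-plucking step might be sketched as follows. An in-memory array stands in for the deleted_records table; apart from deleted_at, the column and field names (`model_name`, `record_id`, and their camel-cased equivalents) are assumptions, as is the `{ id }` shape of the resulting `record`.

```javascript
// Hypothetical rows of the deleted_records table.
const deletedRecords = [
  { modelName: 'Campground', recordId: 2, deletedAt: 10 },
  { modelName: 'CampgroundReview', recordId: 9, deletedAt: 11 },
  { modelName: 'Campground', recordId: 3, deletedAt: 4 },
];

// Equivalent in spirit to:
//   SELECT record_id FROM deleted_records
//   WHERE model_name = $1 AND deleted_at > $2
function deletedEntriesFor(modelName, since) {
  return deletedRecords
    .filter((row) => row.modelName === modelName && row.deletedAt > since)
    .map((row) => ({ action: 'delete', record: { id: row.recordId } }));
}

// Only campground 2 was deleted after the client's last sync at time 5.
const entries = deletedEntriesFor('Campground', 5);
```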

At this point, our example API has all the components necessary to serve up both updates, as well as the ids of deleted records to the caller. The last missing component is to process those on the client device.

Processing Deletions

So far in this chapter, we have discussed deletions from the perspective of the server-side application. Many of the patterns discussed do not impose an additional burden upon the mobile client – for example, logical deletions simply require an additional SQL where-clause to assert that deleted is false when querying records. This is not the case for the pattern of allowing, and logging, physical deletions of records. In that pattern the mobile client must replicate those physical deletions in its own database, otherwise, it runs the risk of defeating the purpose of tracking deletions in the first place.

In the preceding section, we discussed the changes to the API that this pattern necessitates. The modifications to the API in that section altered the structure and meaning of the JSON response, therefore the client must also be updated simply to maintain compatibility, let alone to add support for deleted records (which is truly what we are setting out to accomplish).

From a procedural standpoint, the mobile application’s sync mostly remains unchanged from what we established in chapter 2 – the real differentiators occur when processing the response from the API, specifically in the updateRecords method in our example. The general logic is as follows:

- For each update record, determine if the action is update or delete.

  - If update, retrieve the record itself from the record key of the data-structure, and process either a SQL update or insert based upon the existence of that record in the local database.

  - If delete, issue a SQL delete based on the id stored in the record key of the data structure.

Let’s take those steps, and see how they would map to the updateRecords method in our example:

Here you can see the overall flow. Any API call may contain a mixture of both updates and deletes, and the mobile application must iterate over every entity and take the correct course of action.

Observant readers may realize that the API from the preceding section has a predictable order in which it will return results – due to how it concatenates two disparate query results into a single structure, we could assume that updates precede deletes when processing the API on the mobile application as well. It would be unwise, however, to assume this. It would be better to leave this as an implementation detail of the API, and thusly something that is subject to change. This decision will also prove to be beneficial, in that later chapters will introduce server-side techniques that may change the result ordering, and ideally, we would like to construct a mobile client that is as resilient to that sort of change as possible.
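An order-independent version of this branching logic can be sketched as below. A `Map` stands in for the device's SQL table, the sample entries are invented, and silently skipping an unrecognized action is a design choice of this sketch rather than something the running example prescribes.

```javascript
// Hypothetical local store standing in for the device's SQL database.
const localCampgrounds = new Map([[2, { id: 2, name: 'AAA Campground' }]]);

function updateRecords(entries, table) {
  for (const entry of entries) {
    switch (entry.action) {
      case 'update':
        // Insert or update, depending on whether the row already exists.
        table.set(entry.record.id, { ...entry.record });
        break;
      case 'delete':
        // Deleting a missing row is harmless, so no existence check is needed.
        table.delete(entry.record.id);
        break;
      default:
        // Skip actions this client version does not understand.
        break;
    }
  }
}

// Deletes and updates are deliberately interleaved; no ordering is assumed.
updateRecords(
  [
    { action: 'delete', record: { id: 2 } },
    { action: 'update', record: { id: 5, name: 'Pine Hollow' } },
    { action: 'delete', record: { id: 99 } },
  ],
  localCampgrounds
);
```

Because each entry is handled on its own, the client behaves the same whether the server sends updates first, deletes first, or a mixture.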

After a determination is made by the above code to either execute an update or delete, the logic branches. The update logic (i.e. step 1a) is identical to that outlined in the last chapter, with step 1b being all that is really new outside of the previously discussed branching logic.

Processing deletions on the mobile client involves trivial logic, and for SQL-based mobile clients like the one in our running example, can be made even simpler. SQL databases do not object when asked to delete something that does not exist, and because of this we can, for every delete, simply issue a SQL delete – if the record exists, it will be deleted; if not, nothing happens. This technique not only serves to simplify the code itself but is also a performance optimization. To understand this, consider the alternative:

Had we chosen, for whatever reason, to not issue blanket delete statements, but to have instead verified the existence of each record before determining if a delete was necessary, the logic would have probably looked something like:

- For each possible delete, query the database for that record by id

- If a record exists, delete it

- If a record does not exist, do nothing

In the best case scenario, the above logic issues one SQL statement per record, which is exactly as many as the more simplified logic of issuing deletes for everything. In the worst case scenario, the above logic issues two SQL statements per record (one to verify that the record exists, and one to delete it). In reality, you can probably expect the number to be somewhere in between.
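The statement counts can be checked with a small simulation. The `makeDb` wrapper below is hypothetical; it merely counts how many statements each strategy would send to the database.

```javascript
// Hypothetical database wrapper that counts the statements it is asked to run.
function makeDb(ids) {
  const rows = new Set(ids);
  return {
    statements: 0,
    exists(id) { this.statements += 1; return rows.has(id); },
    delete(id) { this.statements += 1; return rows.delete(id); },
  };
}

// Check first, then delete only when the record is present.
function checkThenDelete(db, ids) {
  for (const id of ids) if (db.exists(id)) db.delete(id);
}

// Issue a delete for every id, whether or not the record is present.
function blanketDelete(db, ids) {
  for (const id of ids) db.delete(id);
}

const toDelete = [1, 2, 3, 4];
const checked = makeDb([1, 2]); // only half of the records exist locally
const blanket = makeDb([1, 2]);
checkThenDelete(checked, toDelete);
blanketDelete(blanket, toDelete);

console.log(checked.statements, blanket.statements); // prints "6 4"
```

With half of the records present, the check-first strategy lands between its best and worst cases, while the blanket strategy always issues exactly one statement per record.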

Additionally, the above logic carries with it the burden of additional code, and it is the author’s opinion that less code is often preferable in situations like this. Finally, the simplified technique we will employ can also be expanded upon, and additionally optimized, using the techniques we will discuss in Part III of this book. Without additional delay, let’s look at what this simplified logic would look like in our running example.

The above logic is a SQL delete statement, simply made more opaque by error handling and the same type of meta-programming we have seen throughout this book – since we are discussing techniques that can process any type of model our example application may have, this is somewhat unavoidable.

From a mobile client perspective, this is all that is required to successfully process deletes as most of the work has been made easier by preparations done on the server-side of this process.

Summary

The techniques and patterns discussed in this chapter outline the varying possibilities for handling deletions in terms of data synchronization. It is the author’s experience that a sufficiently mature application will employ a healthy mixture of all of the above-outlined techniques – in part due to none of them being universally better, and also in part due to the organic nature of software development. Rarely is the ideal solution known at the outset of a project, and more often a project’s goal evolves over time, culminating in, from an academic perspective, less than ideal situations that an application must account for.

From a future-proofing perspective, and with an eye towards the content we will cover in the next chapter, it is the author’s opinion that implementing the necessary data structures in both the API that we discussed in the section titled Propagating Deletions, as well as the client-side processing discussed in the section titled Processing Deletions, is wise even if hard deletes are not utilized. The reasoning for this may not be apparent yet, but will become obvious as we discuss access rights in the next chapter. In short, however, treating the client device as a “dumb device” whenever possible carries its advantages – in other words, asking the client device to delete a record by utilizing the techniques discussed in this chapter does not necessarily imply that a delete actually occurred on the server, but rather that the content may no longer be relevant for that specific client device.

Whole Database Synchronization

Whole database synchronization is the process of synchronizing a client device with data from a server by means of sending a pre-generated database file. This typically takes the form of an SQLite database file, due to SQLite’s ubiquity in mobile and embedded applications. Technically though, this pattern can be implemented with any type of database file, even including pre-generated JSON or XML encoded serialized datasets. It is not a perfect pattern, but it can be useful and does serve as a good foundation from which we can better understand and appreciate the more advanced patterns that we will discuss throughout this book.

Source Code Samples

As a quick side note, samples and accompanying files for this chapter can be found at the following locations;

Pros and Cons

Before we get ahead of ourselves though, let’s discuss some of the criteria that are recommended for this pattern to be a good fit:

The synchronization is one-way, server to client

There are patterns for addressing two-way synchronization, or even just client to server synchronization, but this is not one of them. We will address those in a later chapter, but for now, this pattern is focused only on use cases involving sending data in one direction, and that is from the server to client devices.

The data changes infrequently

This is an inherently subjective qualification, but an important one nevertheless. As we will see later in this chapter, updates that occur too frequently will pose big problems for this pattern and ultimately negate any benefit gained from the ease of implementation. Ultimately you’ll need to determine how frequently is too frequent, but don’t expect this pattern to scale to daily or possibly even weekly updates for all but the smallest datasets.

All users have access to generally all the same data

This isn’t so much a technical requirement as it is a requirement of practicality. Technically you can pre-generate a unique database file per user of your system, but in practice, you will find that this doesn’t scale. Instead, this pattern is more ideally suited for when all of your users have access to the same data, and thusly you only have to generate one database file.

The dataset is relatively small

Very much like the requirement that your data should change infrequently, this too is very subjective. Additionally, datasets that are smaller can afford to be updated more frequently without putting too large of a demand on your users, while larger datasets will necessitate a slower update frequency.

This last bullet and its relationship to update frequency can be best understood if you take into consideration either the bandwidth limitations or the data limitations that your client devices are subject to. For example, it is not uncommon for mobile devices to have a metered amount of data per month, so in that context, a 200-megabyte dataset that is updated monthly will roughly put the same demand on your users as a 50-megabyte dataset that is updated weekly. There are other dynamics to consider as well, but we’ll reserve those for discussion later in this chapter – for now, let’s look at why we might not want to use this pattern.
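The arithmetic behind that comparison is simple enough to sketch. The helper below is hypothetical and assumes every update requires each user to download the entire dataset once.

```javascript
// Approximate number of weekly updates that fall within one month.
const WEEKS_PER_MONTH = 52 / 12; // about 4.33

// Monthly transfer per user, assuming each update downloads the whole dataset.
function monthlyMegabytes(datasetMb, updatesPerMonth) {
  return datasetMb * updatesPerMonth;
}

const monthly = monthlyMegabytes(200, 1);             // 200 MB per month
const weekly = monthlyMegabytes(50, WEEKS_PER_MONTH); // roughly 217 MB per month
```

The two schedules differ by less than ten percent, which is why the smaller dataset can afford the faster cadence.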

The situations where you might not want to use this pattern are generally expressed in terms of the inverse of the requirements. Stated less obtusely, you’ll not want to use this pattern if any of the following are true for your use-case:

- Two-way synchronization is necessary

- The data updates frequently

- Users often only see a subset of data tied to their profile by permissions, memberships, etc.

- The dataset itself is larger than some arbitrary size

Now that we have a better understanding of when this pattern is useful, and when an application might need a more advanced synchronization pattern, let’s dive into the details of how this algorithm works.

Algorithm Overview

The algorithm for replacing the whole dataset generally has five stages:

- A batch process reads all the data that is relevant to the mobile application, reformatting, condensing, and redacting as necessary

- An SQLite database is generated with the data from step 1 and stored for later retrieval

- A process on the mobile device makes an API call to check for updates

- The API, knowing that an updated SQLite database was generated in step 2, serves up this content to the mobile device.

- The mobile device replaces its existing database with the newer one it just received.

Overview of sync algorithm

As is seen in the above overview, this pattern involves two actors outside of the mobile application – a batch process to generate SQLite databases, and an API to serve them up to the mobile device. The general idea behind this separation of concerns is based on that of processing time. The generation of the SQLite database is assumed to take a non-trivial amount of time and to be the same from one mobile client to another. For these reasons, it makes more sense to not burden the API with generating this file. Instead, this is generated once and served upon request of the mobile application.

The batch process represents the bulk of the logic in this pattern. It must know the desired database schema of the mobile device, understand the database schema of the primary database, and know how to translate between them. Once completed it must then write the generated SQLite file to a centralized location like Amazon S3 so that it can be accessed by the API, presumably running on a different server from the batch process.

The synchronization logic in the mobile application is simplified in this pattern thanks in part to the work done by the batch process. By pre-generating the entire database file in the exact format the mobile client will need, the mobile client is then freed of most responsibilities other than the downloading and swapping out of files – though as we’ll discuss later, those responsibilities are not without their own pitfalls.
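The update check in the middle of the algorithm can be sketched as a simple version comparison. Everything here is an assumption made for illustration: the integer `version`, the example.com URL, and the reply shape are hypothetical, and the actual download and file swap are left out.

```javascript
// Hypothetical record of the most recent database file the batch process produced.
const latestBuild = { version: 12, url: 'https://example.com/db/v12.sqlite' };

// API side: tell the client whether a newer file exists.
function checkForUpdate(clientVersion, latest = latestBuild) {
  if (clientVersion >= latest.version) return { upToDate: true };
  return { upToDate: false, version: latest.version, url: latest.url };
}

// Client side: decide what to do with the reply.
function nextAction(reply) {
  return reply.upToDate ? 'keep-current-database' : `download ${reply.url}`;
}

const stale = nextAction(checkForUpdate(11));
const fresh = nextAction(checkForUpdate(12));
```

Keeping the API reply this small means the expensive work stays in the batch process, and the API only has to know which file is the newest.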

Implementation

To better conceptualize this pattern, let’s return to the campground finder app I briefly mentioned earlier in this book. Such an application would generally provide users with a read-only view of their data, with all users having the same access to that data, and with updates occurring only once a quarter. In other words, a perfect fit for this pattern!

For our example let’s start with something simple in terms of a data model – a listing of campgrounds, their ratings, reviews, photos, and other details.

Schema Overview

The above schema diagram illustrates what this data model looks like in Postgres. Those same tables can be created with the following DDL:

Luckily this example maps fairly easily to SQLite, as we’ve yet to use any of the advanced features of Postgres (like JSON storage for example). In a later chapter we’ll explore techniques for handling disparate system capabilities, but for now, our mapping is straightforward.

The schema for the server side of the application (listed above), can be generally mapped to SQLite as follows:

To make this section easier to follow along with, here is some sample data. You’re welcome to skip this and use your own data or make up fake records as you see fit, but having something in these tables will make development and testing of this and future patterns all the easier.

Sample Data Download: sample_data.sql

Server Side Components

With the schema in place and data inserted into those tables, we can focus on creating the database extraction logic. Knowing the schemas of both systems, and how they map to each other (as outlined in the previous table) is key for this step. Let’s look at how an implementation of this process might look.

The above code might look opaque if you’re not familiar with the frameworks, but it’s actually pretty straightforward. Let’s break it down into its various sections for further clarity.

First, we generate the three tables we’re working with inside a fresh SQLite database. The reason this works is that SQLite, unlike most other databases, requires no initial setup – a blank file is a blank SQLite database. So, by opening an SQLite connection to a file that does not exist, and running create statements, we can begin to populate the database schema.
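The schema step described above can be sketched as follows. This is a minimal Python illustration using the standard sqlite3 module, not the listing discussed in this chapter; the table and column names are hypothetical stand-ins for the campground schema, and `create_empty_database` and `list_tables` are names invented for this sketch.

```python
import os
import sqlite3

# Hypothetical, simplified schema. The table and column names are
# assumptions made for illustration, not the chapter's actual DDL.
SCHEMA_DDL = """
CREATE TABLE campgrounds (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE reviews (
    id INTEGER PRIMARY KEY,
    campground_id INTEGER NOT NULL REFERENCES campgrounds(id),
    rating INTEGER NOT NULL,
    body TEXT
);
CREATE TABLE photos (
    id INTEGER PRIMARY KEY,
    campground_id INTEGER NOT NULL REFERENCES campgrounds(id),
    url TEXT NOT NULL
);
"""


def create_empty_database(path):
    """Create a new SQLite file at `path` and apply the schema to it."""
    if os.path.exists(path):
        # Refuse to reuse an old file so every run starts from scratch.
        raise FileExistsError(path)
    # Connecting to a path that does not exist creates the database file.
    conn = sqlite3.connect(path)
    try:
        conn.executescript(SCHEMA_DDL)
        conn.commit()
    finally:
        conn.close()


def list_tables(path):
    """Return the names of the tables in the database, sorted by name."""
    conn = sqlite3.connect(path)
    try:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    finally:
        conn.close()
    return [row[0] for row in rows]
```

Calling `create_empty_database("campgrounds.db")` followed by `list_tables("campgrounds.db")` should list the three tables, confirming that no setup beyond opening the connection was required.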

For database schemas that are more complex than this, it might be worthwhile to take a slightly different approach. In those situations creating the entire schema in code might clutter the application, and distract developers from being able to more easily maintain the application. Instead, an SQLite database can be created manually, outside of the application, and already contain all the necessary schema, just without any data. In place of the above lines of code, the application would instead copy this database “template” to a new filename before opening an SQLite connection and forgo any DDL logic in code.
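A rough Python sketch of this template approach might look like the following. The function name `open_from_template` and both file paths are hypothetical; the only assumption is that a schema-only SQLite file has already been prepared outside the application.

```python
import shutil
import sqlite3


def open_from_template(template_path, output_path):
    """Copy a schema-only template database and open the copy.

    The template itself is never opened for writing, so it stays empty
    and can be reused for every run of the batch process.
    """
    shutil.copyfile(template_path, output_path)
    return sqlite3.connect(output_path)
```

The batch process would then insert rows through the returned connection exactly as it would have after running the DDL in code.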

For the purposes of this example, however, creating the schema in code was the easier route, given this example’s small schema and the need to present both alternatives.

With the schema in place, our next goal is to populate the data. This is accomplished by first extracting all the models from Postgres, then iterating over them and inserting them into SQLite. Since this involves loading the entire contents of the table into memory, this should only be performed on relatively small tables. For larger tables, some form of pagination is necessary, so as to avoid needing to keep all the records in memory at once. Considering the limited scope of this pattern, it is perhaps best to avoid such complexities here and err on the side of simplicity.
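For readers who do need pagination, one possible shape is keyset pagination: fetch a bounded batch ordered by primary key, insert it, and continue from the last key seen. The sketch below is an assumption-laden illustration rather than the chapter's code. It uses sqlite3 connections on both sides so that it runs standalone; with Postgres as the source, the driver and placeholder syntax would differ. The `campgrounds` table and its columns are hypothetical, and ids are assumed to be positive integers.

```python
import sqlite3


def copy_in_batches(source, target, batch_size=500):
    """Copy campgrounds from `source` to `target`, one batch at a time.

    Only `batch_size` rows are held in memory at once. Returns the
    total number of rows copied.
    """
    last_id = 0  # assumes ids are positive integers
    copied = 0
    while True:
        rows = source.execute(
            "SELECT id, name FROM campgrounds "
            "WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        target.executemany(
            "INSERT INTO campgrounds (id, name) VALUES (?, ?)", rows
        )
        target.commit()
        last_id = rows[-1][0]  # resume after the last key in this batch
        copied += len(rows)
    return copied
```

Keyset pagination is used here instead of `OFFSET` because each query can seek directly to the next key rather than re-scanning the rows already copied.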

Such a process is relatively unintelligent as it regenerates everything from scratch, without considering whether a record has actually changed. In the next chapter we’ll start seeing techniques for how to perform differential synchronization, but for now, we will just have to be mindful to balance how frequently we run this process. Some use cases might necessitate updates as infrequently as yearly or quarterly, while others might prefer a monthly or weekly refresh of data. Great care must be taken with this pattern to balance the desire to keep content fresh, with the consideration of infrequent application users.

Updating too infrequently has obvious drawbacks, as changes will be delayed by up to the length of the refresh cycle. In a scenario where the mobile dataset is updated once every two weeks, the worst-case scenario is that mobile users will have to wait two weeks before seeing an update. This can be mitigated by increasing the refresh frequency from once every two weeks to once every week, or even more frequently. At some point, however, you run the risk of forcing your users to download the entire dataset on some interval when only a fraction of the data has even changed – or worse, nothing has changed.

Before we get too far ahead of ourselves with this consideration, let’s first review how the API and mobile components of this process work.

The API in this pattern is fairly basic, as most of the heavy lifting has been offloaded to the previously discussed SQLite generation process.

The API takes a database file checksum that it will use to determine if the caller needs an updated database, or if they already have the latest version. Checking the database version with a checksum verification serves a dual purpose:

- To allow for easy database versioning – no additional information outside of an SQLite database file is necessary.

- If a database becomes corrupt, for whatever reason, an update will be triggered and hopefully resolve the situation.

When the API detects that the checksum the caller provided matches the current database file’s checksum, it simply returns an HTTP 304 status code – short for “Not Modified” – and nothing else. The database file is not transferred to the caller, avoiding unnecessary bandwidth usage. The caller – the mobile application in our example – will need to check the HTTP status code to determine what to do when this API call returns.
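The decision the API makes can be reduced to a small, framework-independent function. The following Python sketch is illustrative only: the text does not name a checksum algorithm, so SHA-256 is an assumption, and `file_checksum` and `handle_database_request` are hypothetical names. A real endpoint would wrap this logic in whatever web framework the server uses.

```python
import hashlib


def file_checksum(path):
    """Return the SHA-256 hex digest of a file (algorithm is an assumption)."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large database files are not loaded at once.
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def handle_database_request(client_checksum, current_db_path):
    """Return an (HTTP status, response body) pair for a sync request.

    `client_checksum` is None when the caller has no database yet.
    """
    if client_checksum is not None and client_checksum == file_checksum(current_db_path):
        # Caller is up to date: 304 Not Modified, no body transferred.
        return 304, b""
    # Missing, stale, or corrupt local copy: send the full database.
    with open(current_db_path, "rb") as f:
        return 200, f.read()
```

Note that a corrupt local file produces a checksum that matches nothing on the server, so it falls through to the full download, which is the self-healing behaviour described in the list above.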

Mobile Updates

The mobile side of this pattern consists of a process to check if updates are available, and apply them if they are. Since this pattern generates a whole SQLite database on the server, the mobile application only has to download and save the updated SQLite database in order to apply these changes. Let’s look at that logic now:

In this example, we can see the two primary methods we said we needed – one for downloading the updates and one for applying them. There is also some boilerplate logic for ensuring that our sync helper is a singleton, a pattern that I have found works well for sync workers. We will not spend too much time discussing this, except to say that this pattern allows us to have a single instance of this class serve the whole application without the mess and clutter that static classes bring with them. In this example, so early in this book, it might be hard to see the value this is driving, but as our sync worker becomes more complex later on this is a pattern that will serve us well. Considering everything going on in the above code sample, it is worth analyzing its various components before moving on.

Starting at the bottom we can see two methods, isUpdatePending and hasPerformedInitialSync. These are two helper methods that assist in determining the state of the application sync. In this pattern the sync process can be in one of three states:

Initial sync necessary

This is the default state of the application. The application has recently been installed and has no local database. An initial sync must be performed before the application is usable.

No update pending

In this state, the application has completed its initial sync (see above), but has no pending update that is ready to be applied. This could be because the application already has the latest version of the database, is in the process of downloading an update, or possibly has not yet checked for updates. Regardless of the underlying scenario, there are currently no updates that are pending installation.

Update pending

The final state signifies that an updated database file has been successfully downloaded and is ready to be applied at the application’s discretion. After the update is eventually applied, the sync process transitions back to the no update pending state.

The above two helper methods allow the application to determine which of those three states the sync process is in. The observant will probably notice the possibility of a fourth state, due to the usage of two boolean values to represent our three states. This is true in theory, but in practice does not occur. This is illustrated in the table below:

The false fourth state would represent that an update is available but the initial sync has not yet been performed. Short of a bug in the application itself, this is a situation that should never actually occur. This is ensured by virtue of how the downloadUpdates method performs the initial sync, which we will look at soon. But first, let’s look at the applyOutstandingUpdates method.

This method is responsible for, as its name would imply, applying any outstanding database updates. This function does not check to determine if the existing database is currently in use, only that a newer database file exists. If a newer file exists, it moves it on top of the previous database, thus finalizing the update. One detail you can see in both this method and the previous isUpdatePending method is that pending updates are simply database files that are given an alternate name. The downloadUpdates method is responsible for creating those files.
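The state helpers and the apply step can be sketched together in a few lines. This Python version is an illustration under stated assumptions, not the chapter's mobile code: it takes the file names database.db and updated.db from the text, assumes both helpers simply test for the existence of those files, and uses snake_case names that are hypothetical equivalents of the methods discussed.

```python
import os
from enum import Enum

DATABASE_FILE = "database.db"  # the live database
UPDATE_FILE = "updated.db"     # a downloaded update awaiting installation


class SyncState(Enum):
    INITIAL_SYNC_NECESSARY = 1
    NO_UPDATE_PENDING = 2
    UPDATE_PENDING = 3


def has_performed_initial_sync(data_dir):
    return os.path.exists(os.path.join(data_dir, DATABASE_FILE))


def is_update_pending(data_dir):
    return os.path.exists(os.path.join(data_dir, UPDATE_FILE))


def sync_state(data_dir):
    """Collapse the two booleans into one of the three sync states."""
    if not has_performed_initial_sync(data_dir):
        # Also covers the theoretical fourth state (update file present,
        # no live database) by treating it as needing an initial sync.
        return SyncState.INITIAL_SYNC_NECESSARY
    if is_update_pending(data_dir):
        return SyncState.UPDATE_PENDING
    return SyncState.NO_UPDATE_PENDING


def apply_outstanding_updates(data_dir):
    """Move the pending file over the live database, if one exists.

    Does not check whether the live database is in use; the caller is
    responsible for choosing a safe moment. Returns True if applied.
    """
    if not is_update_pending(data_dir):
        return False
    os.replace(
        os.path.join(data_dir, UPDATE_FILE),
        os.path.join(data_dir, DATABASE_FILE),
    )
    return True
```

Because the move consumes the update file, applying an update returns the sync process to the no update pending state without any extra bookkeeping.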

The first part of the downloadUpdates method pertains to computing the checksum that will be supplied to the API. As we discussed earlier in this chapter, the API compares the checksum supplied by the caller to that of the current database to determine whether to reply with the database in full or to short-circuit and reply with an HTTP 304 (Not Modified) status code. Because of this, the above code must supply either no checksum at all, the checksum of the current database, or the checksum of the most recently downloaded, but not yet applied, database. The rationale behind this last scenario – supplying the checksum of the most recently downloaded database – has to do with the three sync states discussed earlier, and the timing of database updates. For example, a database may have already been downloaded, but not yet applied, when the application goes to check for updates again. In this scenario the desired effect is to not download the same file again. However, what if a newer database does exist, or the previous download is corrupt? In those scenarios, we would want the application to download the database again, which is why the above logic is structured as it is.
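The three cases just described reduce to a short decision. The Python sketch below is one possible reading of that logic, with assumptions labeled: SHA-256 is an assumed checksum algorithm, `checksum_for_request` is a hypothetical name, and the file names come from the text.

```python
import hashlib
import os

DATABASE_FILE = "database.db"  # the live database
UPDATE_FILE = "updated.db"     # downloaded but not yet applied


def file_checksum(path):
    """Return the SHA-256 hex digest of a file (algorithm is an assumption)."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_for_request(data_dir):
    """Return (checksum, initial_sync) for the next update check.

    - No live database: send no checksum and flag an initial sync.
    - Pending update present: send its checksum, since it is the newest
      copy the device holds.
    - Otherwise: send the checksum of the live database.
    """
    current = os.path.join(data_dir, DATABASE_FILE)
    pending = os.path.join(data_dir, UPDATE_FILE)
    if not os.path.exists(current):
        return None, True
    if os.path.exists(pending):
        return file_checksum(pending), False
    return file_checksum(current), False
```

If the pending file is stale or corrupt, its checksum will not match the server's current file, so the server responds with a full download and the pending file is replaced.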

In the last section of the downloadUpdates method, we can see the logic that is actually responsible for making the API call and processing the result. The processing of the result is particularly noteworthy. In it we see that the application expects to see either an HTTP 200 (OK) or an HTTP 304 (Not Modified); anything else is ignored. Moreover, the logic that checks for HTTP 304 is procedurally unnecessary, existing simply for clarity and to document that the application is intentionally ignoring that return code.

Observant readers may have noticed the initialSync variable that was defined in the earlier snippet of the downloadUpdates method. In this second half of the method we can see how that variable is being used: when an initial sync occurs, the application must write the database out directly to the database.db file, therefore downloading and applying the update in a single step. In all other scenarios, the recently downloaded file must first be written to an intermediate file – updated.db – to avoid replacing the existing database while it is potentially in use.
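The result handling described in the last two paragraphs might be sketched as follows. This is a hedged Python illustration rather than the chapter's code: `process_response` is a hypothetical name, and the HTTP call itself is left out so that only the status handling and the choice of destination file are shown.

```python
import os

DATABASE_FILE = "database.db"  # the live database
UPDATE_FILE = "updated.db"     # intermediate file for later installation


def process_response(data_dir, status, body, initial_sync):
    """Handle the API response; return the path written, or None."""
    if status == 200:
        # On an initial sync nothing can be using the database yet, so
        # download and apply in one step. Otherwise stage the file so
        # the live database is not replaced while potentially in use.
        name = DATABASE_FILE if initial_sync else UPDATE_FILE
        path = os.path.join(data_dir, name)
        with open(path, "wb") as f:
            f.write(body)
        return path
    elif status == 304:
        # Not Modified: intentionally nothing to do. This branch exists
        # only to document that the status code is expected.
        return None
    # Any other status code is ignored.
    return None
```

Writing straight to database.db during the initial sync is also what keeps the theoretical fourth state from arising, since an update file is only ever created once a live database exists.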

In fact, the largest consideration in this entire process is ensuring that the existing database is not in use when attempting to replace it with a newer copy. We will have to be mindful when calling the above applyOutstandingUpdates function – calling it at the wrong time can cause corruption of the database itself or possibly application crashes – likely both. This is not something we want, so special considerations must be made.

There are generally two ways to ensure that a database can be safely replaced:

- Only replace it on application launch or shutdown – therefore ensuring users are not yet, or no longer, using the previous database

- Replace it while the application is running

The first option – to replace the database only when the application launches or stops – is the safest and easiest to implement, so we’ll look at that one first.

In the above example, we see that the application checks for new updates during launch, and then applies them when it exits. Generally speaking, this pattern works well for ensuring that the database is not in use by the application when it’s being updated. Unfortunately, as mobile applications have grown in their abilities, they are also running in the background for longer periods of time. This means that an application’s exit event handler may not be called when an application goes into the background, and therefore that this pattern’s database update may not be applied for an indeterminate amount of time.

The solution to this is to allow the database to be updated while the application is running. The complexity of this approach varies greatly based on the dataset and the application itself. Generally, the following conditions greatly increase the complexity:

- Datasets where records can be deleted

- Datasets without primary keys

- Datasets with time-sensitive elements (e.g. effective dates, expiration dates on listings, etc)

In short, we are talking about situations where we either cannot uniquely identify a row in the database from one version of the data to the next, or where records might go away (either logically or physically) between versions. Ensuring these conditions will not occur may be difficult or even impossible, and since we’re replacing the whole database anyway it is usually best to assume the worst and plan for those scenarios.

In this procedure, the most complex factor is ensuring that the database is not currently being used. Since the patterns discussed in this section revolve around replacing the entire SQLite database it is crucial to ensure that this is accomplished when there are no open connections to it.

There are many ways to ensure that no database connections are open, but perhaps the simplest way is to ask the user. By presenting the user with a notification that a database update is available, and asking them if they’d like to apply that now, you’re accomplishing two things. Firstly, you’re providing feedback to the user that new and updated data is available in the application, and secondly, you’re ensuring that the user is not currently on any application screens and therefore should not have any open connections to the database that cannot otherwise be closed.

Such a technique might not fit the type of application you want to create, as you might prefer not to bother the user with a manual step. There are other techniques that could be employed to allow the database to be updated without intruding upon the user, such as:

- Locking the database to ensure atomic access, and therefore ensuring an opportune time to upgrade

- Tracking open connections, and upgrading when the connection count goes to zero
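To give a sense of what the second technique involves, here is a minimal Python sketch of connection counting. Everything in it is hypothetical: `ConnectionTracker` is an invented name, `apply_update` stands for whatever function swaps the database file, and the sketch assumes every database access in the application goes through the `connection()` context manager.

```python
import threading
from contextlib import contextmanager


class ConnectionTracker:
    """Defer a database swap until no connections are open."""

    def __init__(self, apply_update):
        self._lock = threading.Lock()
        self._open_count = 0
        self._update_requested = False
        self._apply_update = apply_update  # callable that swaps the file

    @contextmanager
    def connection(self):
        """Wrap every use of the database in this context manager."""
        with self._lock:
            self._open_count += 1
        try:
            yield
        finally:
            with self._lock:
                self._open_count -= 1
                self._apply_if_idle()

    def request_update(self):
        """Apply the update now if idle, otherwise when the count hits zero."""
        with self._lock:
            self._update_requested = True
            self._apply_if_idle()

    def _apply_if_idle(self):
        # Caller must hold the lock, so no new connection can be opened
        # while the swap runs. apply_update must not call connection(),
        # or it would deadlock on the non-reentrant lock.
        if self._update_requested and self._open_count == 0:
            self._update_requested = False
            self._apply_update()
```

Even this small sketch has a sharp edge (the deadlock noted in the comment) and silently breaks if any code path opens the database without going through the tracker.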

However, it is the author’s opinion that such techniques are seldom worth the complexity. If your application needs anything more robust than either replacing the database during startup/shutdown or via manual user request, perhaps your application itself is too complex for a pattern as simple as the one outlined in this chapter, and would most likely benefit from one of the other more robust syncing mechanisms discussed in later chapters.